Dear all,

I have compiled Abinit 6.4.1 on a dual quad-core CPU system, and the compilation was successful. But when I ran Abinit on 8 cores with "mpiexec -n 8 ......", I found it was much slower than on a single core: the CPU time with 8 cores was nearly 2 times longer. I have replaced Intel MPI with MPICH2 and with OpenMPI, but the problem still exists. Could anyone give me some suggestions? Thanks!
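For comparisons like this, wall-clock time is usually the more telling measure. The CPU time reported for a parallel run can include the time spent by every process. Below is a minimal sketch for turning wall-clock timings into speedup and parallel efficiency. `scaling_table` is a hypothetical helper, and the timings in the example are invented for illustration.

```python
from typing import Dict, Tuple


def scaling_table(wall_times: Dict[int, float]) -> Dict[int, Tuple[float, float]]:
    """Return {nproc: (speedup, efficiency)} relative to the 1-process run.

    wall_times maps a process count to a measured wall-clock time in seconds.
    Speedup S(n) = T(1) / T(n); efficiency E(n) = S(n) / n.
    """
    if 1 not in wall_times:
        raise ValueError("a single-process reference timing is required")
    t1 = wall_times[1]
    table = {}
    for n, tn in sorted(wall_times.items()):
        speedup = t1 / tn
        table[n] = (speedup, speedup / n)
    return table


if __name__ == "__main__":
    # Hypothetical wall-clock timings in seconds, for illustration only.
    timings = {1: 400.0, 2: 210.0, 4: 115.0, 8: 70.0}
    for n, (s, e) in scaling_table(timings).items():
        print(f"np={n}: speedup={s:.2f}, efficiency={e:.0%}")
```

An efficiency well below 100% at 8 processes, or a speedup below 1, is the quantitative version of "much slower".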

The system environment:

Intel Xeon E5430 * 2, 32G RAM

RHEL Server 5.5

ifort and icc 11.1, impi 4.0.0.025 (or MPICH2 1.3.1 / OpenMPI 1.4.3)

The basic configuration is:

../configure FC=mpiifort CC=mpiicc CXX=mpiicpc --enable-mpi=yes --with-mpi-includes="-I/opt/intel/impi/4.0.0.025/intel64/include" --with-mpi-libs="-L/opt/intel/impi/4.0.0.025/intel64/lib"

and the log file is attached.

By the way, is there any difference between "make", "make mj4" and "make multi multi......", and is it necessary to add "mj4" or "multi multi......" to build the parallel Abinit?

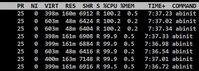

There were 8 process when abinit was running:

Problem with multi-core parallel computation

Moderators: fgoudreault, mcote

Forum rules

Please have a look at ~abinit/doc/config/build-config.ac in the source package for detailed and up-to-date information about the configuration of Abinit 8 builds.

For a video explanation on how to build Abinit 7.x for Linux, please go to: http://www.youtube.com/watch?v=DppLQ-KQA68.

IMPORTANT: when an answer solves your problem, please check the little green V-like button on its upper-right corner to accept it.

Re: Problem with multi-core parallel computation

Hi lxbyf,

I ran Abinit on a dual Xeon E5530 machine and experienced the same problem.

There is another thread concerning this subject (multithreading).

The problem is a hardware one. Your processors are two quad-cores, each of which uses "Hyper-Threading" and "Turbo Boost" technologies. Turbo Boost increases the clock speed when the temperature (and thus the load on the chip) is low.

So when you increase the number of busy cores, the clock speed of each core drops relative to the single-core case, and the execution time does not scale with the number of processes.
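The effect of a clock drop on scaling can be bounded with simple arithmetic. The sketch assumes perfectly parallel work, so the only loss comes from the lower per-core clock when all cores are busy. The frequencies in the example are hypothetical placeholders, not vendor specifications for the E5530 or E5430.

```python
def clock_limited_speedup(nproc: int, freq_one_core: float, freq_all_cores: float) -> float:
    """Best-case speedup of nproc processes when the per-core clock falls from
    freq_one_core (one busy core, turbo active) to freq_all_cores (all busy).

    Assumes no communication or memory-bandwidth limits, so the only loss is
    the lower clock: S = nproc * f_all / f_one.
    """
    if nproc < 1 or freq_one_core <= 0 or freq_all_cores <= 0:
        raise ValueError("nproc and frequencies must be positive")
    return nproc * freq_all_cores / freq_one_core


if __name__ == "__main__":
    # Hypothetical frequencies in GHz.
    print(f"{clock_limited_speedup(8, 2.8, 2.4):.2f}")
```

With these hypothetical numbers, the bound is still about 6.9. A lower clock alone therefore reduces the scaling but cannot by itself make an 8-process run slower than a serial one.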

Now, I have looked at the specifications of your Xeon E5430, and it does not seem to have the same technologies.

So my case does not seem to apply to yours. You should try running with 1 and 2 CPUs to see whether the computation scales at least in that case.

Another important point: if you are new to Abinit, it is possible that you did not set the right parallelization options in the input file.

This would mean that your code runs the same calculation on every process, without any parallelization, so in this case you should observe:

time_single = time_eight/8, where time_eight is the CPU time accumulated over all eight processes.
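Marco's rule of thumb can be turned into a quick heuristic check. The sketch assumes that time_eight means the CPU time summed over all processes, which is one reading of the formula. The function name `looks_replicated` and the 15% tolerance are arbitrary choices for illustration.

```python
def looks_replicated(t_single_cpu: float, t_total_cpu: float, nproc: int,
                     tol: float = 0.15) -> bool:
    """Heuristic: True if the total CPU time of an nproc run is close to
    nproc times the single-process CPU time, i.e. every process seems to
    have done the whole calculation instead of a share of it.
    """
    if nproc < 1 or t_single_cpu <= 0:
        raise ValueError("nproc and t_single_cpu must be positive")
    expected = nproc * t_single_cpu
    return abs(t_total_cpu - expected) <= tol * expected
```

A well-distributed run should instead keep the total CPU time near the single-process value, plus some communication overhead.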

good luck

marco

Marco Mancini

LUTH, Observatoire de Paris

5, place Jules Janssen

92190 MEUDON - FRANCE

- Alain_Jacques

- Posts: 279

- Joined: Sat Aug 15, 2009 9:34 pm

- Location: Université catholique de Louvain - Belgium

Re: Problem with multi-core parallel computation

Hello lxbyf,

I had a look at your log file. A couple of things seem odd to me. In particular, a few mpicc tests use gfortran flags instead of the expected Intel backend compiler syntax, and there is a complaint about an unsuitable mpiexec version. Anyway, make sure that you have working mpixxx binaries (check with the "showme" flag that they are calling the right compiler). You mentioned that Abinit compiled fine; did it pass the test suite? I would also suggest using the latest 6.4.2 production release; there is no harm in having fewer bugs. I assume that "mpiexec -n 8 ......" is a typo; use -np 8 instead.
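The wrapper check can be scripted, as a sketch. Wrapper flag spelling differs between MPI flavours: OpenMPI wrappers accept -showme, while MPICH2 and Intel MPI wrappers (such as the mpiifort/mpiicc/mpiicpc used in the configure line above) use -show. The helper names are hypothetical. The check only looks at the first word the wrapper prints, which is normally the backend compiler.

```python
import os
import shutil
import subprocess


def backend_compiler(show_output: str) -> str:
    """Name of the compiler an MPI wrapper calls, taken from the first word
    of its 'show' output (e.g. 'ifort' rather than 'gfortran')."""
    tokens = show_output.split()
    if not tokens:
        raise ValueError("empty wrapper output")
    return os.path.basename(tokens[0])


def query_wrapper(wrapper: str, flag: str) -> str:
    """Run '<wrapper> <flag>' (e.g. 'mpiifort -show') and return its stdout."""
    if shutil.which(wrapper) is None:
        raise FileNotFoundError(f"{wrapper} not found in PATH")
    result = subprocess.run([wrapper, flag], capture_output=True,
                            text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    # Intel MPI / MPICH2 spelling; OpenMPI wrappers would use "-showme" instead.
    for wrapper in ("mpiifort", "mpiicc", "mpiicpc"):
        try:
            print(wrapper, "->", backend_compiler(query_wrapper(wrapper, "-show")))
        except (OSError, subprocess.CalledProcessError, ValueError) as err:
            print(wrapper, "-> could not check:", err)
```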

It is quite difficult to figure out how you tested the efficiency of the parallel version. Would you please provide a few timings, for example from one of the parallel test suite components? Copy the tests/paral/Input/t_kpt+spin.* files to a new directory, and add the tests/Psps_for_tests/26fe.pspnc pseudopotential file. Then modify the last line of t_kpt+spin.files to reflect the new location. Check the timings for sequential, -np 2, 4, 8 ...; the scaling should be close to linear.
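The procedure above can be scripted roughly as follows. This is a sketch under several assumptions:

- the source tree location is hypothetical;
- the executable is assumed to be called abinit and to read the files file on standard input (abinit < x.files > log);
- the MPI launcher is assumed to be mpirun.

The resulting wall-clock timings can then be compared as speedups T(1)/T(n).

```python
import shutil
import subprocess
import time
from pathlib import Path
from typing import Dict, List, Sequence


def prepare_run_dir(input_dir: Path, psps_dir: Path, run_dir: Path,
                    stem: str = "t_kpt+spin", pseudo: str = "26fe.pspnc") -> Path:
    """Copy <stem>.* and the pseudopotential into run_dir, then replace the
    last line of <stem>.files with the path of the copied pseudopotential."""
    run_dir.mkdir(parents=True, exist_ok=True)
    for src in input_dir.glob(stem + ".*"):
        shutil.copy(src, run_dir / src.name)
    shutil.copy(psps_dir / pseudo, run_dir / pseudo)
    files_file = run_dir / (stem + ".files")
    lines = files_file.read_text().splitlines()
    while lines and not lines[-1].strip():  # ignore trailing blank lines
        lines.pop()
    if not lines:
        raise ValueError(f"{files_file} is empty")
    lines[-1] = str((run_dir / pseudo).resolve())
    files_file.write_text("\n".join(lines) + "\n")
    return files_file


def run_command(nproc: int, executable: str = "abinit") -> List[str]:
    """Sequential run for nproc == 1, otherwise an MPI launch."""
    if nproc == 1:
        return [executable]
    return ["mpirun", "-np", str(nproc), executable]


def time_runs(files_file: Path, counts: Sequence[int] = (1, 2, 4, 8)) -> Dict[int, float]:
    """Run Abinit once per process count (files file on stdin) and return
    {nproc: wall-clock seconds}; each run's log goes to log_np<n>."""
    timings = {}
    for n in counts:
        log_path = files_file.parent / f"log_np{n}"
        with open(files_file) as stdin, open(log_path, "w") as log:
            start = time.perf_counter()
            subprocess.run(run_command(n), stdin=stdin, stdout=log,
                           stderr=subprocess.STDOUT, cwd=files_file.parent,
                           check=True)
            timings[n] = time.perf_counter() - start
    return timings


if __name__ == "__main__":
    tests = Path.home() / "abinit-6.4.2" / "tests"  # hypothetical source location
    if tests.is_dir():
        ff = prepare_run_dir(tests / "paral" / "Input", tests / "Psps_for_tests",
                             Path("scaling_test"))
        print(time_runs(ff))
```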

Kind regards,

Alain

Re: Problem with multi-core parallel computation

Hello everyone,

Thank you for all the replies above; they are very useful. I am new to Abinit, and maybe the examples I tested from the beginner tutorial are not suited to parallelization. When I test other input files, such as the "tests/paral/Input/t_kpt+spin*" files mentioned above, the parallelization is quite efficient. I found that some input files scale well and some do not, so should I put some parallelization options in the input file? I will also check the flags for a new compilation.

Unfortunately, a new problem has appeared in the structure optimization. Would you please give me some suggestions? Thanks very much.

http://forum.abinit.org/viewtopic.php?f=9&t=814

Best regards,

lxbyf

Re: Problem with multi-core parallel computation

Have a look at the tutorial and the corresponding input variables ... start with paral_kgb at http://www.abinit.org/documentation/helpfiles/for-v6.4/input_variables/varpar.html#paral_kgb.

The explanation is somewhat tricky. paral_kgb=0 is the default, i.e. Abinit will parallelize over k-points (assuming that you are calling a parallel Abinit build with mpirun or mpiexec). If paral_kgb=1, extra parallelization (over bands and/or FFT) is enabled. You then have to set npband and/or npfft, otherwise Abinit stops with an error (npfft alone is not enough). When the variable is negative, paral_kgb=-n (with n=2,3, ...), Abinit analyzes the possible parallelization combinations on 2 up to n slots (threads here, for your SMP system). It then gives you a clue about the efficiency of the possible values of npkpt, npband and npfft for your input file.
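As a reading aid, the rules in the paragraph above can be written down as a small helper. `describe_paral_kgb` is a hypothetical name. It encodes only what this post says, not the checks Abinit itself performs on an input file.

```python
from typing import Optional


def describe_paral_kgb(paral_kgb: int = 0, npband: Optional[int] = None,
                       npfft: Optional[int] = None) -> str:
    """Summarize what a paral_kgb setting requests, following the rules
    described in this thread (not Abinit's own input validation)."""
    if paral_kgb == 0:
        return "k-point parallelization only (default)"
    if paral_kgb == 1:
        if npband is None:
            # Per the post: npfft alone is not enough, npband must be set.
            raise ValueError("paral_kgb=1 needs npband (npfft alone is not enough)")
        parts = [f"npband={npband}"]
        if npfft is not None:
            parts.append(f"npfft={npfft}")
        return "k-point + band/FFT parallelization with " + ", ".join(parts)
    if paral_kgb <= -2:
        return (f"analysis only: test npkpt/npband/npfft combinations "
                f"on 2..{-paral_kgb} slots")
    raise ValueError(f"unsupported paral_kgb value: {paral_kgb}")
```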

So if you "recycle" any kind of test file by running mpiexec -np ..., paral_kgb is probably not specified and therefore takes its default value. There is no guarantee that the run time of that arbitrary test will benefit from k-point parallelization, even if all processors look busy.
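To see why a recycled tutorial input may not benefit, here is an idealized bound for the default scheme. The sketch makes three assumptions:

- paral_kgb=0 distributes (k-point, spin) pairs over the processes, as the name of the t_kpt+spin test suggests;
- every pair costs the same;
- communication is free.

`kpoint_speedup_bound` is a hypothetical helper.

```python
import math


def kpoint_speedup_bound(nkpt: int, nsppol: int, nproc: int) -> float:
    """Idealized wall-time speedup when nkpt*nsppol equal-cost tasks are
    spread over nproc processes with no communication cost.

    The slowest process handles ceil(tasks / nproc) tasks, so
    S = tasks / ceil(tasks / nproc), which can never exceed tasks.
    """
    if nkpt < 1 or nsppol < 1 or nproc < 1:
        raise ValueError("nkpt, nsppol and nproc must be positive")
    tasks = nkpt * nsppol
    return tasks / math.ceil(tasks / nproc)
```

For example, an input with 2 k-points and nsppol=1 gives a bound of 2.0 on 8 processes. Six of the eight processes then have no k-point of their own, even though all of them may appear busy.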

Kind regards,

Alain
