flowtk Package¶

- abipy.flowtk.flow_main(main)[source]¶

This decorator is used to decorate main functions producing Flows. It adds the initialization of the logger and an argument parser that allows one to select the loglevel, the workdir of the flow as well as the YAML file with the parameters of the TaskManager. The main function shall have the signature:

main(options)

where options is the container with the command-line options generated by ArgumentParser.

- Parameters:

main – main function.
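The behaviour described above can be illustrated with a minimal, standard-library-only sketch. It is not AbiPy's implementation: the name `flow_main_sketch` and the option names (`--loglevel`, `--workdir`, `--manager`) are assumptions chosen for illustration.

```python
import argparse
import functools
import logging


def flow_main_sketch(main):
    """Wrap main(options) with logger setup and a small argument parser (illustrative only)."""

    @functools.wraps(main)
    def wrapper(argv=None):
        parser = argparse.ArgumentParser()
        # Option names are assumptions for this sketch, not AbiPy's actual flags.
        parser.add_argument("--loglevel", default="ERROR", help="Logging level name.")
        parser.add_argument("-w", "--workdir", default="", help="Working directory of the flow.")
        parser.add_argument("-m", "--manager", default=None, help="YAML file with TaskManager parameters.")
        options = parser.parse_args(argv)

        # Translate the level name into the numeric value expected by logging.
        numeric_level = getattr(logging, options.loglevel.upper(), None)
        if not isinstance(numeric_level, int):
            raise ValueError(f"Invalid log level: {options.loglevel}")
        logging.basicConfig(level=numeric_level)

        return main(options)

    return wrapper


@flow_main_sketch
def main(options):
    # A real script would build and return a Flow here.
    return options.workdir
```

Calling `main(["--workdir", "/tmp/flow"])` parses the list instead of `sys.argv`, which keeps the wrapped function easy to exercise from tests.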

abiinspect Module¶

This module provides objects to inspect the status of the Abinit tasks at run-time by extracting information from the main output file (text format).

- abipy.flowtk.abiinspect.plottable_from_outfile(filepath: str)[source]¶

Factory function that returns a plottable object by inspecting the main output file of Abinit. Returns None if it is not able to detect the class to instantiate.

- class abipy.flowtk.abiinspect.ScfCycle(fields: dict)[source]¶

Bases: Mapping

It essentially consists of a dictionary mapping string to list of floats containing the data at the different iterations.

- MAGIC = 'Must be defined by the subclass.'¶

- classmethod from_file(filepath: str) ScfCycle[source]¶

Read the first occurrence of ScfCycle from file.

- classmethod from_stream(stream) ScfCycle | None[source]¶

Read the first occurrence of ScfCycle from stream.

- Returns:

None if no ScfCycle entry is found.
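As a rough illustration of the "first occurrence from a stream" logic, the sketch below searches for the MAGIC marker and collects the columns that follow into a dictionary of lists. The row layout (a text label followed by one number per column) and the function name `read_first_cycle` are assumptions for this example, not the parser used by AbiPy.

```python
import io

MAGIC = "iter Etot(hartree)"  # same marker string as GroundStateScfCycle.MAGIC


def read_first_cycle(stream, magic=MAGIC):
    """Return a dict mapping column name -> list of floats, or None if magic is not found."""
    for line in stream:
        # Collapse whitespace so the marker matches regardless of column padding.
        if magic in " ".join(line.split()):
            names = line.split()
            break
    else:
        return None

    fields = {name: [] for name in names}
    for line in stream:
        tokens = line.split()
        # Assumed row layout: a text label (e.g. "ETOT") followed by one value per column.
        if len(tokens) != len(names) + 1:
            break
        try:
            values = [float(tok) for tok in tokens[1:]]
        except ValueError:
            break
        for name, value in zip(names, values):
            fields[name].append(value)
    return fields


sample = """\
 some preamble
     iter   Etot(hartree)      deltaE(h)  residm
 ETOT  1  -8.86  -8.860E+00 2.458E-02
 ETOT  2  -8.87  -1.000E-02 1.000E-04

 At SCF step 2, done
"""
cycle = read_first_cycle(io.StringIO(sample))
```

Returning None rather than raising mirrors the documented behaviour of from_stream when no entry is present.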

- plot(ax_list=None, fontsize=8, **kwargs) Any[source]¶

Uses matplotlib to plot the evolution of the SCF cycle.

- Parameters:

ax_list – List of axes. If None a new figure is produced.

fontsize – legend fontsize.

kwargs – keyword arguments are passed to ax.plot

Returns: matplotlib figure

Keyword arguments controlling the display of the figure:

| kwargs | Meaning |
| --- | --- |
| title | Title of the plot (Default: None). |
| show | True to show the figure (default: True). |
| savefig | “abc.png” or “abc.eps” to save the figure to a file. |
| size_kwargs | Dictionary with options passed to fig.set_size_inches e.g. size_kwargs=dict(w=3, h=4) |
| tight_layout | True to call fig.tight_layout (default: False) |
| ax_grid | True (False) to add (remove) grid from all axes in fig. Default: None i.e. fig is left unchanged. |
| ax_annotate | Add labels to subplots e.g. (a), (b). Default: False |
| fig_close | Close figure. Default: False. |
| plotly | Try to convert mpl figure to plotly. |

- plotly(fontsize=12, **kwargs)[source]¶

Uses plotly to plot the evolution of the SCF cycle.

- Parameters:

fontsize – legend fontsize.

kwargs – keyword arguments are passed to go.Scatter.

Returns: plotly figure

Keyword arguments controlling the display of the figure:

| kwargs | Meaning |
| --- | --- |
| title | Title of the plot (Default: None). |
| show | True to show the figure (default: True). |
| hovermode | True to show the hover info (default: False) |
| savefig | “abc.png”, “abc.jpeg” or “abc.webp” to save the figure to a file. |
| write_json | Write plotly figure to write_json JSON file. Inside jupyter-lab, one can right-click the write_json file from the file menu and open with “Plotly Editor”. Make some changes to the figure, then use the file menu to save the customized plotly plot. Requires jupyter labextension install jupyterlab-chart-editor. See https://github.com/plotly/jupyterlab-chart-editor |
| renderer | (str or None (default None)) A string containing the names of one or more registered renderers (separated by ‘+’ characters) or None. If None, then the default renderers specified in plotly.io.renderers.default are used. See https://plotly.com/python-api-reference/generated/plotly.graph_objects.Figure.html |
| config | (dict) A dict of parameters to configure the figure. The defaults are set in plotly.js. |
| chart_studio | True to push figure to chart_studio server. Requires authentication. Default: False. |
| template | Plotly template. See https://plotly.com/python/templates/ [“plotly”, “plotly_white”, “plotly_dark”, “ggplot2”, “seaborn”, “simple_white”, “none”]. Default is None, i.e. the default template is used. |

- class abipy.flowtk.abiinspect.GroundStateScfCycle(fields: dict)[source]¶

Bases: ScfCycle

Result of the Ground State self-consistent cycle.

- MAGIC = 'iter Etot(hartree)'¶

- class abipy.flowtk.abiinspect.D2DEScfCycle(fields: dict)[source]¶

Bases: ScfCycle

Result of the Phonon self-consistent cycle.

- MAGIC = 'iter 2DEtotal(Ha)'¶

- class abipy.flowtk.abiinspect.PhononScfCycle(fields: dict)[source]¶

Bases: D2DEScfCycle

Iterations of the DFPT SCF cycle for phonons.

- class abipy.flowtk.abiinspect.CyclesPlotter[source]¶

Bases: object

Relies on the plot method of cycle objects to build multiple subfigures.

- combiplot(fontsize=8, **kwargs) Any[source]¶

Compare multiple cycles on a grid: one subplot per quantity, all cycles on the same subplot.

- Parameters:

fontsize – Legend fontsize.

Keyword arguments controlling the display of the figure:

| kwargs | Meaning |
| --- | --- |
| title | Title of the plot (Default: None). |
| show | True to show the figure (default: True). |
| savefig | “abc.png” or “abc.eps” to save the figure to a file. |
| size_kwargs | Dictionary with options passed to fig.set_size_inches e.g. size_kwargs=dict(w=3, h=4) |
| tight_layout | True to call fig.tight_layout (default: False) |
| ax_grid | True (False) to add (remove) grid from all axes in fig. Default: None i.e. fig is left unchanged. |
| ax_annotate | Add labels to subplots e.g. (a), (b). Default: False |
| fig_close | Close figure. Default: False. |
| plotly | Try to convert mpl figure to plotly. |

- class abipy.flowtk.abiinspect.Relaxation(cycles: list[GroundStateScfCycle])[source]¶

Bases: Iterable

A list of GroundStateScfCycle objects.

Note

Forces, stresses and crystal structures are missing. This object is mainly used to analyze the behavior of the Scf cycles during the structural relaxation. A more powerful and detailed analysis can be obtained by using the HIST.nc file.

- classmethod from_file(filepath: str) Relaxation | None[source]¶

Initialize the object from the Abinit main output file.

- classmethod from_stream(stream) Relaxation | None[source]¶

Extract data from stream. Returns None if some error occurred.

- history()[source]¶

dictionary of lists with the evolution of the data as function of the relaxation step.
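One way to picture the returned structure: if each SCF cycle is a mapping from column name to the list of values per iteration, the history keeps only the last value of every cycle. The sketch below uses plain dictionaries in place of GroundStateScfCycle objects; the helper name `history_from_cycles` is hypothetical.

```python
from collections import defaultdict


def history_from_cycles(cycles):
    """Collect the final value of each quantity, one entry per relaxation step."""
    history = defaultdict(list)
    for cycle in cycles:
        for name, values in cycle.items():
            # The last iteration of an SCF cycle is the result for that relaxation step.
            history[name].append(values[-1])
    return dict(history)


cycles = [
    {"Etot(hartree)": [-8.0, -8.5], "residm": [1e-2, 1e-5]},
    {"Etot(hartree)": [-8.6, -8.7, -8.71], "residm": [1e-3, 1e-6, 1e-8]},
]
history = history_from_cycles(cycles)
```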

- slideshow(**kwargs)[source]¶

Uses matplotlib to plot the evolution of the structural relaxation.

- Parameters:

ax_list – List of axes. If None a new figure is produced.

Returns: matplotlib figure

- plot(ax_list=None, fontsize=8, **kwargs) Any[source]¶

Plot relaxation history i.e. the results of the last iteration of each SCF cycle.

- Parameters:

ax_list – List of axes. If None a new figure is produced.

fontsize – legend fontsize.

kwargs – keyword arguments are passed to ax.plot

Returns: matplotlib figure

Keyword arguments controlling the display of the figure:

| kwargs | Meaning |
| --- | --- |
| title | Title of the plot (Default: None). |
| show | True to show the figure (default: True). |
| savefig | “abc.png” or “abc.eps” to save the figure to a file. |
| size_kwargs | Dictionary with options passed to fig.set_size_inches e.g. size_kwargs=dict(w=3, h=4) |
| tight_layout | True to call fig.tight_layout (default: False) |
| ax_grid | True (False) to add (remove) grid from all axes in fig. Default: None i.e. fig is left unchanged. |
| ax_annotate | Add labels to subplots e.g. (a), (b). Default: False |
| fig_close | Close figure. Default: False. |
| plotly | Try to convert mpl figure to plotly. |

- exception abipy.flowtk.abiinspect.YamlTokenizerError[source]¶

Bases: Exception

Exceptions raised by YamlTokenizer.

- class abipy.flowtk.abiinspect.YamlTokenizer(filename: str)[source]¶

Bases: Iterator

Provides context-manager support so you can use it in a with statement.

- Error¶

alias of

YamlTokenizerError

- seek(offset[, whence]) None. Move to new file position.[source]¶

Argument offset is a byte count. Optional argument whence defaults to 0 (offset from start of file, offset should be >= 0); other values are 1 (move relative to current position, positive or negative), and 2 (move relative to end of file, usually negative, although many platforms allow seeking beyond the end of a file). If the file is opened in text mode, only offsets returned by tell() are legal. Use of other offsets causes undefined behavior. Note that not all file objects are seekable.

- next()[source]¶

Returns the first YAML document in stream.

Warning

Assumes that the YAML documents are closed explicitly with the sentinel '...'

- all_yaml_docs() list[source]¶

Returns a list with all the YAML docs found in stream. Seek the stream before returning.

Warning

Assumes that all the YAML docs (with the exception of the last one) are closed explicitly with the sentinel '...'
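The role of the end-of-document sentinel can be seen in a small standalone sketch that extracts YAML documents embedded in a text log. It relies only on the standard YAML markers (`---` to open a document, `...` to close it); the function name `split_yaml_docs` is hypothetical and the logic is a simplification, not the tokenizer implemented in this module.

```python
import io


def split_yaml_docs(stream):
    """Return the text of every YAML document found between '---' and '...' lines."""
    docs, current = [], None
    for line in stream:
        stripped = line.rstrip()
        if stripped.startswith("---"):
            if current is not None:
                # Previous document was never closed: keep what was collected so far.
                docs.append("".join(current))
            # Start of a new document; the tag (if any) follows the marker.
            current = [line]
        elif current is not None:
            current.append(line)
            if stripped == "...":
                docs.append("".join(current))
                current = None
    if current is not None:
        # The last document may lack an explicit end marker.
        docs.append("".join(current))
    return docs


text = "log line\n--- !Kpoints\nfoo: 1\n...\nnoise\n--- !Other\nbar: 2\n"
docs = split_yaml_docs(io.StringIO(text))
```

Without the closing marker there is no reliable way to tell where a document ends and ordinary log output resumes, which is why only the last document may omit it.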

- abipy.flowtk.abiinspect.yaml_read_kpoints(filename: str, doc_tag: str = '!Kpoints') ndarray[source]¶

Read the K-points from file. Return numpy array

- abipy.flowtk.abiinspect.yaml_read_irred_perts(filename: str, doc_tag='!IrredPerts') list[AttrDict][source]¶

Read the list of irreducible perturbations from file.

- class abipy.flowtk.abiinspect.YamlDoc(text: str, lineno: int, tag=None)[source]¶

Bases: object

Handy object that stores the YAML document, its main tag and the position inside the file.

- text¶

- lineno¶

- tag¶

abiobjects Module¶

- class abipy.flowtk.abiobjects.LujForSpecie(l, u, j, unit)[source]¶

Bases: LdauForSpecie

This object stores the value of l, u, j used for a single atomic specie.

- class abipy.flowtk.abiobjects.LdauParams(usepawu, structure)[source]¶

Bases: object

This object stores the parameters for LDA+U calculations with the PAW method. It facilitates the specification of the U-J parameters in the Abinit input file (see to_abivars). The U-J operator will be applied only on the atomic species that have been selected by calling luj_for_symbol.

To setup the Abinit variables for a LDA+U calculation in NiO with a U value of 5 eV applied on the nickel atoms:

    luj_params = LdauParams(usepawu=1, structure=nio_structure)
    # Apply U-J on Ni only.
    u = 5.0
    luj_params.luj_for_symbol("Ni", l=2, u=u, j=0.1*u, unit="eV")
    print(luj_params.to_abivars())

- class abipy.flowtk.abiobjects.LexxParams(structure)[source]¶

Bases: object

This object stores the parameters for local exact exchange calculations with the PAW method. It facilitates the specification of the LEXX parameters in the Abinit input file (see to_abivars). The LEXX operator will be applied only on the atomic species that have been selected by calling lexx_for_symbol.

To perform a LEXX calculation for NiO in which the LEXX is computed only for the l=2 channel of the nickel atoms:

    lexx_params = LexxParams(nio_structure)
    lexx_params.lexx_for_symbol("Ni", l=2)
    print(lexx_params.to_abivars())

abiphonopy Module¶

Interface between phonopy and the AbiPy workflow model.

- abipy.flowtk.abiphonopy.atoms_from_structure(structure: Structure)[source]¶

Convert a pymatgen Structure into a phonopy Atoms object.

- abipy.flowtk.abiphonopy.structure_from_atoms(atoms) Structure[source]¶

Convert a phonopy Atoms object into an abipy Structure.

- class abipy.flowtk.abiphonopy.PhonopyWork(workdir=None, manager=None)[source]¶

Bases: Work

This work computes the inter-atomic force constants with phonopy.

- scdims(3)¶

numpy arrays with the number of cells in the supercell along the three reduced directions

- phonon¶

Phonopy object used to construct the supercells with displaced atoms.

- phonopy_tasks¶

List of ScfTask. Each task computes the forces in one perturbed supercell.

- bec_tasks¶

- cpdata2dst¶

If not None, the work will copy the output results to the outdir of the flow once all_ok is invoked. Note that cpdata2dst must be an absolute path.

- classmethod from_gs_input(gs_inp, scdims, phonopy_kwargs=None, displ_kwargs=None) PhonopyWork[source]¶

Build the work from an AbinitInput object representing a GS calculation.

- Parameters:

gs_inp – AbinitInput object representing a GS calculation in the initial unit cell.

scdims – Number of unit cell replicas along the three reduced directions.

phonopy_kwargs – (Optional) dictionary with arguments passed to Phonopy constructor.

displ_kwargs – (Optional) dictionary with arguments passed to generate_displacements.

Return: PhonopyWork instance.

- class abipy.flowtk.abiphonopy.PhonopyGruneisenWork(workdir=None, manager=None)[source]¶

Bases: Work

This work computes the Grüneisen parameters with phonopy. The workflow is as follows:

It is necessary to run three phonon calculations. One is calculated at the equilibrium volume and the remaining two are calculated at the slightly larger volume and smaller volume than the equilibrium volume. The unitcells at these volumes have to be fully relaxed under the constraint of each volume.

- scdims(3)¶

numpy arrays with the number of cells in the supercell along the three reduced directions.

- classmethod from_gs_input(gs_inp, voldelta, scdims, phonopy_kwargs=None, displ_kwargs=None) PhonopyGruneisenWork[source]¶

Build the work from an AbinitInput object representing a GS calculation.

- Parameters:

gs_inp – AbinitInput object representing a GS calculation in the initial unit cell.

voldelta – Absolute increment for unit cell volume. The three volumes are: [v0 - voldelta, v0, v0 + voldelta] where v0 is taken from gs_inp.structure.

scdims – Number of unit cell replicas along the three reduced directions.

phonopy_kwargs – (Optional) dictionary with arguments passed to Phonopy constructor.

displ_kwargs – (Optional) dictionary with arguments passed to generate_displacements.

Return: PhonopyGruneisenWork instance.
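The three volumes follow directly from the voldelta description. The sketch below also derives the linear scale factor that would produce each volume under a uniform (isotropic) rescaling of the lattice; that rescaling is an assumption of this example, since the documentation only states the volumes, and the helper name is hypothetical.

```python
def volumes_and_scale_factors(v0, voldelta):
    """Return the three target volumes and the isotropic lattice scale factor for each."""
    volumes = [v0 - voldelta, v0, v0 + voldelta]
    if volumes[0] <= 0:
        raise ValueError("voldelta must be smaller than the equilibrium volume v0")
    # Under uniform scaling, lengths change as the cube root of the volume ratio.
    scales = [(v / v0) ** (1.0 / 3.0) for v in volumes]
    return volumes, scales


volumes, scales = volumes_and_scale_factors(100.0, 1.0)
```

Note that voldelta is an absolute increment in volume units, not a percentage.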

abitimer Module¶

dfpt_works Module¶

Work subclasses related to DFPT.

- class abipy.flowtk.dfpt_works.ElasticWork(workdir=None, manager=None)[source]¶

Bases: Work, MergeDdb

This Work computes the elastic constants and (optionally) the piezoelectric tensor. It consists of response function calculations for:

rigid-atom elastic tensor

rigid-atom piezoelectric tensor

interatomic force constants at gamma

Born effective charges

The structure is assumed to be already relaxed

Create a Flow for phonon calculations. The flow has one work with:

1 GS Task

3 DDK Task

4 Phonon Tasks (Gamma point)

6 Elastic tasks (3 uniaxial + 3 shear strain)

The Phonon tasks and the elastic task will read the DDK produced at the beginning

- classmethod from_scf_input(scf_input: AbinitInput, with_relaxed_ion=True, with_piezo=False, with_dde=False, tolerances=None, den_deps=None, manager=None) ElasticWork[source]¶

- Parameters:

scf_input

with_relaxed_ion

with_piezo

with_dde – Compute electric field perturbations.

tolerances – Dict of tolerances

den_deps

manager

Similar to from_scf_task, the difference is that this method requires an input for SCF calculation instead of a ScfTask. All the tasks (Scf + Phonon) are packed in a single Work whereas in the previous case we usually have multiple works.

- class abipy.flowtk.dfpt_works.NscfDdksWork(workdir=None, manager=None)[source]¶

Bases: Work

This work requires a DEN file and computes the KS energies with a non self-consistent task with a dense k-mesh and empty states. This task is then followed by the computation of the DDK matrix elements with nstep = 1 (the first order change of the wavefunctions is not converged but we only need the matrix elements). Mainly used to prepare optic calculations or other post-processing steps requiring the DDKs.

- classmethod from_scf_task(scf_task: ScfTask, ddk_ngkpt, ddk_shiftk, ddk_nband, manager=None) NscfDdksWork[source]¶

Build NscfDdksWork from a scf_task.

- Parameters:

scf_task – GS task. Must produce the DEN file required for the NSCF run.

ddk_ngkpt – k-mesh used for the NSCF run and the non self-consistent DDK tasks.

ddk_shiftk – k-mesh shifts

ddk_nband – Number of bands (occupied + empty) used in the NSCF task and the DDKs tasks.

manager – TaskManager instance. Use default if None.

effmass_works Module¶

Work subclasses related to effective mass calculations.

- class abipy.flowtk.effmass_works.EffMassLineWork(workdir=None, manager=None)[source]¶

Bases: Work

Work for the computation of effective masses via finite differences along a k-line. Useful for cases such as NC+SOC where DFPT is not implemented or if one is interested in the non-parabolic behaviour of the energy dispersion.

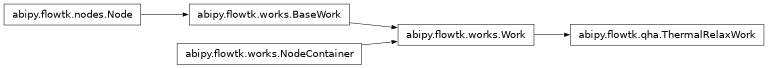

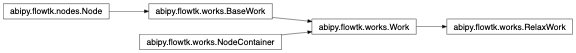

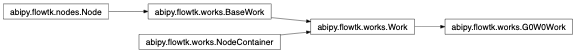

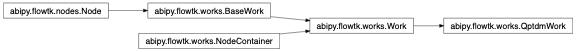

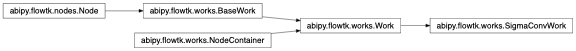

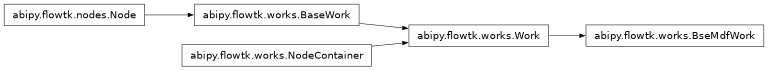

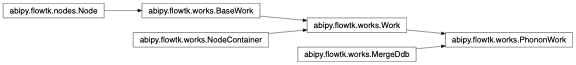

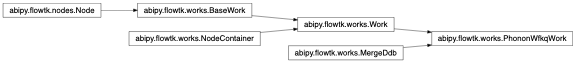

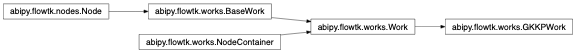

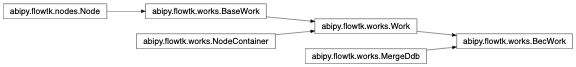

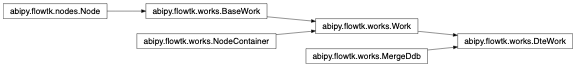

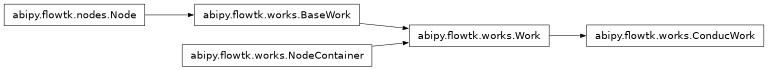

Inheritance Diagram

- classmethod from_scf_input(scf_input: AbinitInput, k0_list, step=0.01, npts=15, red_dirs=[[1, 0, 0], [0, 1, 0], [0, 0, 1]], ndivsm=-20, cart_dirs=None, den_node=None, manager=None) EffMassLineWork[source]¶

Build the Work from an abipy.abio.inputs.AbinitInput representing a GS-SCF calculation.

- Parameters:

scf_input – abipy.abio.inputs.AbinitInput for GS-SCF used as template to generate the other inputs.

k0_list – List with the reduced coordinates of the k-points where effective masses are wanted.

step – Step for finite difference in Angstrom^-1

npts – Number of points sampled around each k-point for each direction.

red_dirs – List of reduced directions used to generate the segments passing through the k-point

cart_dirs – List of Cartesian directions used to generate the segments passing through the k-point

den_node – Path to the DEN file or Task object producing a DEN file. Can be used to avoid the initial SCF calculation if a DEN file is already available. If None, a GS calculation is performed.

ndivsm – if > 0, it’s the number of divisions for the smallest segment of the path (Abinit variable). if < 0, it’s interpreted as the pymatgen line_density parameter in which the number of points in the segment is proportional to its length. Typical value: -20.

manager – abipy.flowtk.tasks.TaskManager instance. Use default if None.
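To make the finite-difference idea concrete, the sketch below estimates an effective mass from band energies sampled on a symmetric grid of npts points with spacing step around k0. It uses a three-point central difference and atomic units (energies in Hartree, k in Bohr^-1, so that m* = 1 / (d²E/dk²)); both choices, and the function name, are assumptions of this example rather than the post-processing performed by AbiPy.

```python
def effective_mass_fd(energies, step):
    """Estimate m* at the central sample from a 3-point central second derivative."""
    npts = len(energies)
    if npts < 3 or npts % 2 == 0:
        raise ValueError("need an odd number (>= 3) of samples centered on k0")
    mid = npts // 2
    # Central finite difference for the curvature of E(k) at k0.
    d2e_dk2 = (energies[mid - 1] - 2.0 * energies[mid] + energies[mid + 1]) / step**2
    # In atomic units hbar = m_e = 1, hence m* = 1 / curvature.
    return 1.0 / d2e_dk2


# Synthetic parabolic band with a known effective mass of 0.25 m_e.
step, npts, m_true = 0.01, 15, 0.25
ks = [(i - npts // 2) * step for i in range(npts)]
energies = [k * k / (2.0 * m_true) for k in ks]
mass = effective_mass_fd(energies, step)
```

For a strictly parabolic band the central difference recovers the mass up to rounding; deviations on real data are a measure of the non-parabolicity mentioned in the class description.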

- class abipy.flowtk.effmass_works.EffMassDFPTWork(workdir=None, manager=None)[source]¶

Bases: Work

Work for the computation of effective masses with DFPT. Requires explicit list of k-points and range of bands.

Inheritance Diagram

- classmethod from_scf_input(scf_input: AbinitInput, k0_list, effmass_bands_f90, ngfft=None, den_node=None, manager=None) EffMassDFPTWork[source]¶

Build the Work from an abipy.abio.inputs.AbinitInput representing a GS-SCF calculation.

- Parameters:

scf_input – abipy.abio.inputs.AbinitInput for GS-SCF used as template to generate the other inputs.

k0_list – List with the reduced coordinates of the k-points where effective masses are wanted.

effmass_bands_f90 – (nkpt, 2) array with band range for effmass computation. WARNING: Assumes Fortran convention with indices starting from 1.

ngfft – FFT divisions (3 integers). Used to enforce the same FFT mesh in the NSCF run as the one used for GS.

den_node – Path to the DEN file or Task object producing a DEN file. Can be used to avoid the initial SCF calculation if a DEN file is already available. If None, a GS calculation is performed.

manager – abipy.flowtk.tasks.TaskManager instance. Use default if None.
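Because effmass_bands_f90 uses 1-based indices, band ranges expressed in Python's 0-based, half-open convention need to be shifted before being passed in. The helper below is a hypothetical convenience for that conversion; it assumes that each row holds an inclusive (first, last) pair of band indices, which the parameter description does not state explicitly.

```python
def to_f90_band_ranges(py_ranges):
    """Convert 0-based half-open (start, stop) pairs to 1-based inclusive (first, last) pairs."""
    f90_ranges = []
    for start, stop in py_ranges:
        if not 0 <= start < stop:
            raise ValueError(f"invalid band range: ({start}, {stop})")
        # start shifts by +1; the exclusive 0-based stop equals the inclusive 1-based last band.
        f90_ranges.append((start + 1, stop))
    return f90_ranges


# Bands 3 and 4 (0-based) at the first k-point, band 0 only at the second.
bands_f90 = to_f90_band_ranges([(3, 5), (0, 1)])
```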

- class abipy.flowtk.effmass_works.EffMassAutoDFPTWork(workdir=None, manager=None)[source]¶

Bases: Work

Work for the automatic computation of effective masses with DFPT. Band extrema are automatically detected by performing a NSCF calculation along a high-symmetry k-path with ndivsm. Requires more computation than EffMassWork since input variables (kpoints and band range) are computed at runtime.

Inheritance Diagram

- classmethod from_scf_input(scf_input: AbinitInput, ndivsm=15, tolwfr=1e-20, den_node=None, manager=None) EffMassAutoDFPTWork[source]¶

Build the Work from an abipy.abio.inputs.AbinitInput representing a GS-SCF calculation.

- Parameters:

scf_input – abipy.abio.inputs.AbinitInput for GS-SCF used as template to generate the other inputs.

ndivsm – if > 0, it’s the number of divisions for the smallest segment of the path (Abinit variable). if < 0, it’s interpreted as the pymatgen line_density parameter in which the number of points in the segment is proportional to its length. Typical value: -20. This option is the recommended one if the k-path contains two high symmetry k-points that are very close as ndivsm > 0 may produce a very large number of wavevectors.

tolwfr – Tolerance on residuals for NSCF calculation

den_node – Path to the DEN file or Task object producing a DEN file. Can be used to avoid the initial SCF calculation if a DEN file is already available. If None, a GS calculation is performed.

manager – abipy.flowtk.tasks.TaskManager instance. Use default if None.

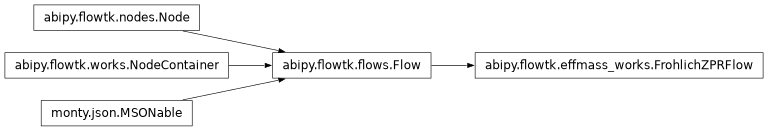

- class abipy.flowtk.effmass_works.FrohlichZPRFlow(workdir, manager=None, pickle_protocol=-1, remove=False)[source]¶

Bases: Flow

Inheritance Diagram

- classmethod from_scf_input(workdir: str, scf_input: AbinitInput, ddb_node=None, ndivsm=15, tolwfr=1e-20, metadata=None, manager=None) FrohlichZPRFlow[source]¶

Build the Flow from an abipy.abio.inputs.AbinitInput representing a GS-SCF calculation. Final results are stored in the “zprfrohl_results.json” in the outdata directory of the flow.

- Parameters:

workdir – Working directory.

scf_input – abipy.abio.inputs.AbinitInput for GS-SCF used as template to generate the other inputs.

ddb_node – Path to an external DDB file that is used to avoid the calculation of BECS/eps_inf and phonons. If None, a DFPT calculation is automatically performed by the flow.

ndivsm – Number of divisions used to sample the smallest segment of the k-path.

tolwfr – Tolerance on residuals for NSCF calculation

manager – abipy.flowtk.tasks.TaskManager instance. Use default if None.

metadata – Dictionary with metadata added to the final JSON file.

eph_flows Module¶

Flows for electron-phonon calculations (high-level interface)

- class abipy.flowtk.eph_flows.EphPotFlow(workdir, manager=None, pickle_protocol=-1, remove=False)[source]¶

Bases: Flow

This flow computes the e-ph scattering potentials on a q-mesh defined by ngqpt and a list of q-points (usually a q-path) specified by the user. The DFPT potentials on the q-mesh are merged in the DVDB located in the outdata of the second work while the DFPT potentials on the q-path are merged in the DVDB located in the outdata of the third work. These DVDB files are then passed to the EPH code to compute the average over the unit cell of the periodic part of the scattering potentials as a function of q. Results are stored in the V1QAVG.nc files of the outdata of the tasks in the fourth work.

- classmethod from_scf_input(workdir: str, scf_input: AbinitInput, ngqpt, qbounds, ndivsm=5, with_becs=True, with_quad=True, dvdb_add_lr_list=(0, 1, 2), ddb_filepath=None, dvdb_filepath=None, ddk_tolerance=None, prepgkk=0, manager=None) EphPotFlow[source]¶

Build the flow from an input file representing a GS calculation.

- Parameters:

workdir – Working directory.

scf_input – Input for the GS SCF run.

ngqpt – 3 integers defining the q-mesh.

qbounds – List of boundaries defining the q-path used for the computation of the GKQ files. The q-path is automatically generated using ndivsm and the reciprocal-space metric. If ndivsm is 0, the code assumes that qbounds contains the full list of q-points and no pre-processing is performed.

ndivsm – Number of points in the smallest segment of the path defined by qbounds. Use 0 to pass full list of q-points.

with_becs – Activate calculation of Electric field and Born effective charges.

with_quad – Activate calculation of dynamical quadrupoles. Requires with_becs. Note that only selected features are compatible with dynamical quadrupoles. Please consult <https://docs.abinit.org/topics/longwave/>

dvdb_add_lr_list – List of dvdb_add_lr values to consider in the interpolation.

ddb_filepath – Paths to the DDB/DVDB files that will be used to bypass the DFPT computation on the ngqpt mesh.

dvdb_filepath – Paths to the DDB/DVDB files that will be used to bypass the DFPT computation on the ngqpt mesh.

ddk_tolerance – dict {“varname”: value} with the tolerance used in the DDK run if with_becs.

prepgkk – 1 to activate computation of all 3 * natom perts (debugging option).

manager – abipy.flowtk.tasks.TaskManager object.
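The interplay between qbounds and ndivsm can be sketched as follows: the shortest segment receives ndivsm divisions, longer segments receive proportionally more, and ndivsm = 0 returns qbounds unchanged. For simplicity this example measures segment lengths with the Euclidean norm of the reduced coordinates instead of the reciprocal-space metric, and the function name `make_qpath` is hypothetical, so the generated points will generally differ from those produced by AbiPy.

```python
import math


def make_qpath(qbounds, ndivsm):
    """Generate q-points along the path defined by qbounds (illustrative metric, see lead-in)."""
    if ndivsm == 0:
        # qbounds already holds the full list of q-points: no pre-processing.
        return [tuple(q) for q in qbounds]

    segments = list(zip(qbounds[:-1], qbounds[1:]))
    lengths = [math.dist(a, b) for a, b in segments]
    min_len = min(lengths)
    if min_len == 0.0:
        raise ValueError("consecutive boundaries must be distinct")

    path = []
    for (a, b), length in zip(segments, lengths):
        # Number of divisions proportional to the segment length.
        ndiv = max(1, round(ndivsm * length / min_len))
        for i in range(ndiv):
            t = i / ndiv
            path.append(tuple(x + t * (y - x) for x, y in zip(a, b)))
    # Close the path with the last boundary.
    path.append(tuple(qbounds[-1]))
    return path


qbounds = [(0, 0, 0), (0.5, 0, 0), (0.5, 0.5, 0.5)]
qpath = make_qpath(qbounds, ndivsm=2)
```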

- class abipy.flowtk.eph_flows.GkqPathFlow(workdir, manager=None, pickle_protocol=-1, remove=False)[source]¶

Bases: Flow

This flow computes the gkq e-ph matrix elements <k+q|Delta V_q|k> for a list of q-points (usually a q-path). The results are stored in the GKQ.nc files for the different q-points. These files can be used to analyze the behaviour of the e-ph matrix elements as a function of qpts with the objects provided by the abipy.eph.gkq module. It is also possible to compute the e-ph matrix elements using the interpolated DFPT potentials if test_ft_interpolation is set to True.

- classmethod from_scf_input(workdir: str, scf_input: AbinitInput, ngqpt, qbounds, ndivsm=5, with_becs=True, with_quad=True, dvdb_add_lr_list=(0, 1, 2), ddb_filepath=None, dvdb_filepath=None, ddk_tolerance=None, test_ft_interpolation=False, prepgkk=0, manager=None) GkqPathFlow[source]¶

Build the flow from an input file representing a GS calculation.

- Parameters:

workdir – Working directory.

scf_input – Input for the GS SCF run.

ngqpt – 3 integers defining the q-mesh.

qbounds – List of boundaries defining the q-path used for the computation of the GKQ files. The q-path is automatically generated using ndivsm and the reciprocal-space metric. If ndivsm is 0, the code assumes that qbounds contains the full list of q-points and no pre-processing is performed.

ndivsm – Number of points in the smallest segment of the path defined by qbounds. Use 0 to pass list of q-points.

with_becs – Activate calculation of Electric field and Born effective charges.

with_quad – Activate calculation of dynamical quadrupoles. Requires with_becs. Note that only selected features are compatible with dynamical quadrupoles. Please consult <https://docs.abinit.org/topics/longwave/>

dvdb_add_lr_list – List of dvdb_add_lr values to consider in the interpolation.

ddb_filepath – Paths to the DDB/DVDB files that will be used to bypass the DFPT computation on the ngqpt mesh.

dvdb_filepath – Paths to the DDB/DVDB files that will be used to bypass the DFPT computation on the ngqpt mesh.

ddk_tolerance – dict {“varname”: value} with the tolerance used in the DDK run if with_becs.

test_ft_interpolation – True to add an extra Work in which the GKQ files are computed using the interpolated DFPT potentials and the q-mesh defined by ngqpt. The quality of the interpolation depends on the convergence of the BECS, epsinf and ngqpt, and on the treatment of the LR part of the e-ph scattering potentials.

prepgkk – 1 to activate computation of all 3 * natom perts (debugging option).

manager – abipy.flowtk.tasks.TaskManager object.

events Module¶

This module defines the events signaled by abinit during the execution. It also provides a parser to extract these events from the main output file and the log file.

- class abipy.flowtk.events.EventsParser[source]¶

Bases: object

Parses the output or the log file produced by ABINIT and extracts the list of events.

- Error¶

alias of

EventsParserError

- abipy.flowtk.events.get_event_handler_classes(categories=None)[source]¶

Return the list of handler classes.

- class abipy.flowtk.events.ScfConvergenceWarning(src_file: str, src_line: int, message: str)[source]¶

Bases: AbinitCriticalWarning

Warning raised when the GS SCF cycle did not converge.

- yaml_tag: Any = '!ScfConvergenceWarning'¶

- class abipy.flowtk.events.NscfConvergenceWarning(src_file: str, src_line: int, message: str)[source]¶

Bases: AbinitCriticalWarning

Warning raised when the GS NSCF cycle did not converge.

- yaml_tag: Any = '!NscfConvergenceWarning'¶

- class abipy.flowtk.events.RelaxConvergenceWarning(src_file: str, src_line: int, message: str)[source]¶

Bases: AbinitCriticalWarning

Warning raised when the structural relaxation did not converge.

- yaml_tag: Any = '!RelaxConvergenceWarning'¶

- class abipy.flowtk.events.Correction(handler, actions, event, reset=False)[source]¶

Bases: MSONable

- classmethod from_dict(d: dict) Correction[source]¶

- Parameters:

d – Dict representation.

- Returns:

MSONable class.

- class abipy.flowtk.events.DilatmxError(src_file: str, src_line: int, message: str)[source]¶

Bases: AbinitError

This Error occurs in variable cell calculations when the increase in the unit cell volume is too large.

- yaml_tag: Any = '!DilatmxError'¶

- class abipy.flowtk.events.DilatmxErrorHandler(max_dilatmx=1.3)[source]¶

Bases: ErrorHandler

Handle DilatmxError. Abinit produces a netcdf file with the last structure before aborting. The handler changes the structure in the input with the last configuration and modifies the value of dilatmx.

- event_class¶

alias of

DilatmxError

- can_change_physics = False¶

- as_dict() dict[source]¶

Basic implementation of as_dict if __init__ has no arguments. Subclasses may need to overwrite.

- classmethod from_dict(d: dict) DilatmxErrorHandler[source]¶

Basic implementation of from_dict if __init__ has no arguments. Subclasses may need to overwrite.

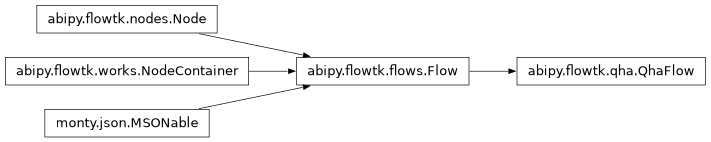

flows Module¶

A Flow is a container for Works, and works consist of tasks. Flows are the final objects that can be dumped directly to a pickle file on disk. Flows are executed using abirun (abipy).

- class abipy.flowtk.flows.Flow(workdir, manager=None, pickle_protocol=-1, remove=False)[source]¶

Bases: Node, NodeContainer, MSONable

This object is a container of work. Its main task is managing the possible inter-dependencies among the work and the creation of dynamic workflows that are generated by callbacks registered by the user.

- creation_date¶

String with the creation_date

- pickle_protocol¶

Protocol for Pickle database (default: -1 i.e. latest protocol)

Important methods for constructing flows:

- register_work: register (add) a work to the flow
- register_task: register a work that contains only this task; returns the work
- allocate: propagate the workdir and manager of the flow to all the registered tasks
- build:
- build_and_pickle_dump:

- VERSION = '0.1'¶

- PICKLE_FNAME = '__AbinitFlow__.pickle'¶

- Error¶

alias of

FlowError

- Results¶

alias of

FlowResults

- classmethod from_inputs(workdir, inputs, manager=None, pickle_protocol=-1, task_class=<class 'abipy.flowtk.tasks.ScfTask'>, work_class=<class 'abipy.flowtk.works.Work'>, remove=False) Flow[source]¶

Construct a simple flow from a list of inputs. The flow contains a single Work with tasks whose class is given by task_class.

Warning

Don’t use this interface if you have dependencies among the tasks.

- Parameters:

workdir – String specifying the directory where the works will be produced.

inputs – List of inputs.

manager – abipy.flowtk.tasks.TaskManager object responsible for the submission of the jobs. If manager is None, the object is initialized from the yaml file located either in the working directory or in the user configuration dir.

pickle_protocol – Pickle protocol version used for saving the status of the object. -1 denotes the latest version supported by the python interpreter.

task_class – The class of the abipy.flowtk.tasks.Task.

work_class – The class of the abipy.flowtk.works.Work.

remove – attempt to remove working directory workdir if directory already exists.

- classmethod as_flow(obj: Any) Flow[source]¶

Convert obj into a Flow. Accepts filepath, dict, or Flow object.

- as_dict(**kwargs) dict[source]¶

JSON serialization, note that we only need to save a string with the working directory since the object will be reconstructed from the pickle file located in workdir

- to_dict(**kwargs) dict¶

JSON serialization, note that we only need to save a string with the working directory since the object will be reconstructed from the pickle file located in workdir

- classmethod temporary_flow(workdir=None, manager=None) Flow[source]¶

Return a Flow in a temporary directory. Useful for unit tests.

- set_status(status, msg: str) None[source]¶

Set and return the status of the flow

- Parameters:

status – Status object or string representation of the status

msg – string with human-readable message used in the case of errors.

- set_workdir(workdir: str, chroot=False) None[source]¶

Set the working directory. Cannot be set more than once unless chroot is True

- reload() None[source]¶

Reload the flow from the pickle file. Used when we are monitoring the flow executed by the scheduler. In this case, indeed, the flow might have been changed by the scheduler and we have to reload the new flow in memory.

- classmethod pickle_load(filepath: str, spectator_mode=True, remove_lock=False) Flow[source]¶

Loads the object from a pickle file and performs initial setup.

- Parameters:

filepath – Filename or directory name. If filepath is a directory, we scan the directory tree starting from filepath and we read the first pickle database. Raise RuntimeError if multiple databases are found.

spectator_mode – If True, the nodes of the flow are not connected by signals. This option is usually used when we want to read a flow in read-only mode and we want to avoid callbacks that can change the flow.

remove_lock – True to remove the file lock if any (use it carefully).
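The directory scan described for filepath can be pictured with a small standard-library sketch. It is not abipy's implementation: find_pickle_database is a hypothetical helper, and raising ValueError when nothing is found is an assumption. Only the file name (PICKLE_FNAME) and the RuntimeError for multiple databases come from the text above.

```python
import os
import tempfile

PICKLE_FNAME = "__AbinitFlow__.pickle"  # value of Flow.PICKLE_FNAME documented above


def find_pickle_database(top: str) -> str:
    """Hypothetical helper: locate the single pickle database below `top`."""
    if os.path.isfile(top):
        return top
    found = []
    for dirpath, _dirnames, filenames in os.walk(top):
        if PICKLE_FNAME in filenames:
            found.append(os.path.join(dirpath, PICKLE_FNAME))
    if not found:
        # Assumption: the behaviour for "no database" is not documented here.
        raise ValueError(f"Cannot find {PICKLE_FNAME} inside {top}")
    if len(found) > 1:
        raise RuntimeError(f"Found multiple databases: {found}")
    return found[0]


# Usage: a temporary directory tree containing one (empty) database file.
with tempfile.TemporaryDirectory() as tmp:
    flowdir = os.path.join(tmp, "flow")
    os.makedirs(flowdir)
    open(os.path.join(flowdir, PICKLE_FNAME), "wb").close()
    located = find_pickle_database(tmp)
    relative = os.path.relpath(located, tmp)
```

Here relative ends up pointing at the database inside the flow subdirectory.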

- classmethod from_file(filepath: str, spectator_mode=True, remove_lock=False) Flow¶

Loads the object from a pickle file and performs initial setup.

- Parameters:

filepath – Filename or directory name. If filepath is a directory, we scan the directory tree starting from filepath and we read the first pickle database. Raise RuntimeError if multiple databases are found.

spectator_mode – If True, the nodes of the flow are not connected by signals. This option is usually used when we want to read a flow in read-only mode and we want to avoid callbacks that can change the flow.

remove_lock – True to remove the file lock if any (use it carefully).

- change_manager(new_manager: Any) TaskManager[source]¶

Change the manager at runtime.

- get_panel(**kwargs)[source]¶

Build panel with widgets to interact with the abipy.flowtk.flows.Flow either in a notebook or in panel app.

- property pyfile: str | None¶

Absolute path of the python script used to generate the flow. None if unset. Set by set_pyfile

- check_pid_file() int[source]¶

This function checks if we are already running the abipy.flowtk.flows.Flow with a PyFlowScheduler. Raises: Flow.Error if the pid file of the scheduler exists.

- property mongo_id¶

- get_mongo_info()[source]¶

Return a JSON dictionary with information on the flow. Mainly used for constructing the info section in FlowEntry. The default implementation is empty. Subclasses must implement it

- mongo_assimilate()[source]¶

This function is called by client code when the flow is completed. Return a JSON dictionary with the most important results produced by the flow. The default implementation is empty. Subclasses must implement it.

- property works: list[Work]¶

List of abipy.flowtk.works.Work objects contained in self.

- property status_counter¶

Returns a Counter object that counts the number of tasks with given status (use the string representation of the status as key).
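As a rough illustration of what such a counter looks like, the sketch below builds a collections.Counter from a list of status strings. The status strings other than "Completed" are assumptions made for the example; a real flow derives them from its tasks.

```python
from collections import Counter

# Hypothetical status strings, one per task (assumed for illustration).
task_statuses = ["Completed", "Completed", "Running", "Ready", "Completed"]

status_counter = Counter(task_statuses)
n_completed = status_counter["Completed"]
n_missing = status_counter["Error"]  # a Counter returns 0 for keys it has not seen
```

Because missing keys count as zero, client code can query any status without checking for its presence first.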

- property ncores_reserved: int¶

Returns the number of cores reserved at this moment. A core is reserved if the task is not running but we have submitted the task to the queue manager.

- property ncores_allocated: int¶

Returns the number of cores allocated at this moment. A core is allocated if it’s running a task or if we have submitted a task to the queue manager but the job is still in pending state.

- property ncores_used: int¶

Returns the number of cores used at this moment. A core is used if there’s a job that is running on it.
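The three core counters can be related with a toy model. This is only a sketch of the definitions given above: ToyTask and its state names are hypothetical, not abipy classes.

```python
from dataclasses import dataclass


@dataclass
class ToyTask:
    """Hypothetical stand-in for a task: state is 'pending', 'running' or 'done'."""
    state: str
    ncores: int


tasks = [ToyTask("running", 4), ToyTask("pending", 8), ToyTask("done", 2), ToyTask("running", 1)]

# used: a job is running on the cores.
ncores_used = sum(t.ncores for t in tasks if t.state == "running")
# reserved: submitted to the queue manager but not running yet.
ncores_reserved = sum(t.ncores for t in tasks if t.state == "pending")
# allocated: either running or submitted and still pending.
ncores_allocated = sum(t.ncores for t in tasks if t.state in ("running", "pending"))
```

Under these definitions, the allocated count is the sum of the used and reserved counts.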

- property has_chrooted: str¶

Returns a string that evaluates to True if we have changed the workdir for visualization purposes, e.g. we are using sshfs to mount the remote directory where the Flow is located. The string gives the previous workdir of the flow.

- chroot(new_workdir: str) None[source]¶

Change the workdir of the abipy.flowtk.flows.Flow. Mainly used for allowing the user to open the GUI on the local host and access the flow from remote via sshfs.

Note

Calling this method will make the flow go in read-only mode.

- groupby_status() dict[source]¶

Returns dictionary mapping the task status to the list of named tuples (task, work_index, task_index).
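A minimal sketch of this kind of grouping, using plain strings in place of task and status objects. The flow layout and the TaskEntry name are assumptions for illustration only.

```python
from collections import defaultdict, namedtuple

TaskEntry = namedtuple("TaskEntry", "task work_index task_index")

# Hypothetical flow layout: a list of works, each a list of (task_name, status) pairs.
works = [
    [("scf", "Completed"), ("nscf", "Running")],
    [("scr", "Ready"), ("sigma", "Ready")],
]

by_status = defaultdict(list)
for w_idx, work in enumerate(works):
    for t_idx, (name, status) in enumerate(work):
        by_status[status].append(TaskEntry(name, w_idx, t_idx))
```

Each entry keeps the work and task indices, so a task can be located again from the grouped view.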

- groupby_work_class() dict[source]¶

Returns a dictionary mapping the work class to the list of works in the flow

- groupby_task_class() dict[source]¶

Returns a dictionary mapping the task class to the list of tasks in the flow

- iflat_nodes(status=None, op='==', nids=None) Generator[None][source]¶

Generator that produces a flat sequence of nodes. If status is not None, only the tasks with the specified status are selected. nids is an optional list of node identifiers used to filter the nodes.

- iflat_tasks_wti(status=None, op='==', nids=None) Generator[tuple[Task, int, int]][source]¶

Generator to iterate over all the tasks of the Flow. Yields: (task, work_index, task_index)

If status is not None, only the tasks whose status satisfies the condition (task.status op status) are selected. status can be either one of the flags defined in the abipy.flowtk.tasks.Task class (e.g. Task.S_OK) or a string e.g. “S_OK”. nids is an optional list of node identifiers used to filter the tasks.

- iflat_tasks(status=None, op='==', nids=None) Generator[Task][source]¶

Generator to iterate over all the tasks of the abipy.flowtk.flows.Flow.

If status is not None, only the tasks whose status satisfies the condition (task.status op status) are selected. status can be either one of the flags defined in the abipy.flowtk.tasks.Task class (e.g. Task.S_OK) or a string e.g. “S_OK”. nids is an optional list of node identifiers used to filter the tasks.
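The condition (task.status op status) can be sketched with the standard operator module. Everything below is a toy model: the integer ranks, the status names other than S_OK, and iflat_toy_tasks are assumptions, not the abipy implementation.

```python
import operator

# Hypothetical integer ranks for statuses; abipy defines its own status objects.
STATUS_RANK = {"S_INIT": 1, "S_READY": 2, "S_RUN": 3, "S_OK": 4}
OPERATORS = {"==": operator.eq, "!=": operator.ne, "<": operator.lt,
             "<=": operator.le, ">": operator.gt, ">=": operator.ge}


def iflat_toy_tasks(tasks, status=None, op="==", nids=None):
    """Yield (node_id, status_name) pairs that pass the status and nids filters."""
    compare = OPERATORS[op]
    for nid, name in tasks:
        if nids is not None and nid not in nids:
            continue
        if status is not None and not compare(STATUS_RANK[name], STATUS_RANK[status]):
            continue
        yield nid, name


toy_tasks = [(10, "S_OK"), (11, "S_RUN"), (12, "S_READY"), (13, "S_OK")]
ok_tasks = list(iflat_toy_tasks(toy_tasks, status="S_OK"))
unfinished = list(iflat_toy_tasks(toy_tasks, status="S_OK", op="<", nids=[11, 13]))
```

With op="<" and status="S_OK", only tasks that have not yet reached S_OK are kept, and nids narrows the selection further.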

- abivalidate_inputs() tuple[source]¶

Run ABINIT in dry mode to validate all the inputs of the flow.

- Returns:

(isok, tuples)

isok is True if all inputs are ok. tuples is a list of namedtuple objects, one for each task in the flow. Each namedtuple has the following attributes:

retcode: Return code. 0 if OK.

log_file: log file of the Abinit run, use log_file.read() to access its content.

stderr_file: stderr file of the Abinit run, use stderr_file.read() to access its content.

- Raises:

- check_dependencies() None[source]¶

Test the dependencies of the nodes for possible deadlocks and raise RuntimeError

- find_deadlocks()[source]¶

This function detects deadlocks

- Returns:

named tuple with the tasks grouped in: deadlocks, runnables, running

- check_status(**kwargs) None[source]¶

Check the status of the works in self.

- Parameters:

show – True to show the status of the flow.

kwargs – keyword arguments passed to show_status

- fix_abicritical() int[source]¶

This function tries to fix critical events originating from ABINIT. Returns the number of tasks that have been fixed.

- fix_queue_critical() int[source]¶

This function tries to fix critical events originating from the queue submission system.

Returns the number of tasks that have been fixed.

- show_info(**kwargs) None[source]¶

Print info on the flow i.e. total number of tasks, works, tasks grouped by class.

Example

    Task Class    Number
    ----------    ------
    ScfTask       1
    NscfTask      1
    ScrTask       2
    SigmaTask     6

- compare_abivars(varnames, nids=None, wslice=None, printout=False, with_colors=False) DataFrame[source]¶

Print the input of the tasks to the given stream.

- Parameters:

varnames – List of Abinit variables. If not None, only the variables in varnames are selected and printed.

nids – List of node identifiers. By default, all nodes are shown

wslice – Slice object used to select works.

printout – True to print dataframe.

with_colors – True if task status should be colored.

- get_dims_dataframe(nids=None, printout=False, with_colors=False) DataFrame[source]¶

Analyze output files produced by the tasks. Print pandas DataFrame with dimensions.

- Parameters:

nids – List of node identifiers. By default, all nodes are shown

printout – True to print dataframe.

with_colors – True if task status should be colored.

- write_fix_flow_script() None[source]¶

Write python script in the flow workdir that can be used by expert users to change the input variables of the task according to their status.

- compare_structures(nids=None, with_spglib=False, what='io', verbose=0, precision=3, printout=False, with_colors=False)[source]¶

Analyze structures of the tasks (input and output structures if it’s a relaxation task). Print pandas DataFrame.

- Parameters:

nids – List of node identifiers. By default, all nodes are shown

with_spglib – If True, spglib is invoked to get the spacegroup symbol and number

what (str) – “i” for input structures, “o” for output structures.

precision – Floating point output precision (number of significant digits). This is only a suggestion

printout – True to print dataframe.

with_colors – True if task status should be colored.

- compare_ebands(nids=None, with_path=True, with_ibz=True, with_spglib=False, verbose=0, precision=3, printout=False, with_colors=False) tuple[source]¶

Analyze electron bands produced by the tasks. Return pandas DataFrame and abipy.electrons.ebands.ElectronBandsPlotter.

- Parameters:

nids – List of node identifiers. By default, all nodes are shown

with_path – Select files with ebands along k-path.

with_ibz – Select files with ebands in the IBZ.

with_spglib – If True, spglib is invoked to get the spacegroup symbol and number

precision – Floating point output precision (number of significant digits). This is only a suggestion

printout – True to print dataframe.

with_colors – True if task status should be colored.

Return: (df, ebands_plotter)

- compare_hist(nids=None, with_spglib=False, verbose=0, precision=3, printout=False, with_colors=False) tuple[source]¶

Analyze HIST nc files produced by the tasks. Print pandas DataFrame with final results. Return: (df, hist_plotter)

- Parameters:

nids – List of node identifiers. By default, all nodes are shown

with_spglib – If True, spglib is invoked to get the spacegroup symbol and number

precision – Floating point output precision (number of significant digits). This is only a suggestion

printout – True to print dataframe.

with_colors – True if task status should be colored.

- show_summary(**kwargs) None[source]¶

Print a short summary with the status of the flow and a counter task_status –> number_of_tasks

- Parameters:

stream – File-like object, Default: sys.stdout

Example

    Status       Count
    ---------    -----
    Completed    10

    <Flow, node_id=27163, workdir=flow_gwconv_ecuteps>, num_tasks=10, all_ok=True

- get_dataframe(as_dict=False) DataFrame[source]¶

Return pandas dataframe task info or dictionary if as_dict is True. This function should be called after flow.get_status to update the status.

- show_status(return_df=False, **kwargs)[source]¶

Report the status of the works and the status of the different tasks on the specified stream.

- Parameters:

stream – File-like object, Default: sys.stdout

nids – List of node identifiers. By default, all nodes are shown

wslice – Slice object used to select works.

verbose – Verbosity level (default 0). > 0 to show only the works that are not finalized.

- Returns:

data_task dictionary with mapping task –> dict(report=report, timedelta=timedelta)

- show_events(status=None, nids=None, stream=<_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>)[source]¶

Print the Abinit events (ERRORS, WARNINGS, COMMENTS) to stdout

- Parameters:

status – if not None, only the tasks with this status are selected

nids – optional list of node identifiers used to filter the tasks.

stream – File-like object, Default: sys.stdout

- Returns:

data_task dictionary with mapping task –> report

- show_corrections(status=None, nids=None, stream=<_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>) int[source]¶

Show the corrections applied to the flow at run-time.

- Parameters:

status – if not None, only the tasks with this status are selected.

nids – optional list of node identifiers used to filter the tasks.

stream – File-like object, Default: sys.stdout

Return: The number of corrections found.

- show_history(status=None, nids=None, full_history=False, metadata=False, stream=<_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>) None[source]¶

Print the history of the flow to stream

- Parameters:

status – if not None, only the tasks with this status are selected

full_history – Print full info set, including nodes with an empty history.

nids – optional list of node identifiers used to filter the tasks.

metadata – print history metadata (experimental)

stream – File-like object, Default: sys.stdout

- show_inputs(varnames=None, nids=None, wslice=None, stream=<_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>) None[source]¶

Print the input of the tasks to the given stream.

- Parameters:

varnames – List of Abinit variables. If not None, only the variables in varnames are selected and printed.

nids – List of node identifiers. By default, all nodes are shown

wslice – Slice object used to select works.

stream – File-like object, Default: sys.stdout

- listext(ext, stream=<_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>) None[source]¶

Print to the given stream a table with the list of the output files with the given ext produced by the flow.

- select_tasks(nids=None, wslice=None, task_class=None) list[Task][source]¶

Return a list with a subset of tasks.

- Parameters:

nids – List of node identifiers.

wslice – Slice object used to select works.

task_class – String or class used to select tasks. Ignored if None.

Note

nids and wslice are mutually exclusive. If no argument is provided, the full list of tasks is returned.
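The selection rules in the note can be sketched as follows. This is a toy model only: select_toy_tasks and the flow layout are hypothetical, and raising ValueError when both arguments are given is an assumption about how the mutual exclusion might be enforced.

```python
# Hypothetical flow layout: each work is a list of (node_id, task_name) pairs.
works = [
    [(1, "scf"), (2, "nscf")],
    [(3, "scr")],
    [(4, "sigma_a"), (5, "sigma_b")],
]


def select_toy_tasks(works, nids=None, wslice=None):
    """Return task names selected either by node ids or by a slice over the works."""
    if nids is not None and wslice is not None:
        # Assumption: the real method may handle this case differently.
        raise ValueError("nids and wslice are mutually exclusive")
    if wslice is not None:
        return [name for work in works[wslice] for _nid, name in work]
    all_tasks = [task for work in works for task in work]
    if nids is not None:
        return [name for nid, name in all_tasks if nid in nids]
    return [name for _nid, name in all_tasks]


last_two_works = select_toy_tasks(works, wslice=slice(1, None))
by_ids = select_toy_tasks(works, nids=[2, 4])
everything = select_toy_tasks(works)
```

A slice selects whole works, whereas node identifiers pick individual tasks regardless of the work they belong to.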

- get_task_scfcycles(nids=None, wslice=None, task_class=None, exclude_ok_tasks=False) list[Task][source]¶

Return list of (task, scfcycle) tuples for all the tasks in the flow with a SCF algorithm e.g. electronic GS-SCF iteration, DFPT-SCF iterations etc.

- Parameters:

nids – List of node identifiers.

wslice – Slice object used to select works.

task_class – String or class used to select tasks. Ignored if None.

exclude_ok_tasks – True if only running tasks should be considered.

- Returns:

List of ScfCycle subclass instances.

- show_tricky_tasks(verbose=0, stream=<_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>) None[source]¶

Print list of tricky tasks i.e. tasks that have been restarted or launched more than once or tasks with corrections.

- Parameters:

verbose – Verbosity level. If > 0, task history and corrections (if any) are printed.

stream – File-like object. Default: sys.stdout

- inspect(nids=None, wslice=None, **kwargs)[source]¶

Inspect the tasks (SCF iterations, Structural relaxation …) and produce matplotlib plots.

- Parameters:

nids – List of node identifiers.

wslice – Slice object used to select works.

kwargs – keyword arguments passed to task.inspect method.

Note

nids and wslice are mutually exclusive. If nids and wslice are both None, all tasks in self are inspected.

- Returns:

List of matplotlib figures.

- look_before_you_leap() str[source]¶

This method should be called before running the calculation to make sure that the most important requirements are satisfied.

Return: string with inconsistencies/errors.

- tasks_from_nids(nids) list[Task][source]¶

Return the list of tasks associated to the given list of node identifiers (nids).

Note

Invalid ids are ignored

- wti_from_nids(nids) list[Task][source]¶

Return the list of (w, t) indices from the list of node identifiers nids.

- open_files(what='o', status=None, op='==', nids=None, editor=None)[source]¶

Open the files of the flow inside an editor (command line interface).

- Parameters:

what – string with the list of characters selecting the file type. Possible choices:

i ==> input_file, o ==> output_file, f ==> files_file, j ==> job_file, l ==> log_file, e ==> stderr_file, q ==> qout_file, all ==> all files.

status – if not None, only the tasks with this status are selected

op – status operator. Requires status. A task is selected if task.status op status evaluates to true.

nids – optional list of node identifiers used to filter the tasks.

editor – Select the editor. None to use the default editor ($EDITOR shell env var)

- parse_timing(nids=None)[source]¶

Parse the timer data in the main output file(s) of Abinit. Requires timopt /= 0 in the input file, usually timopt = -1.

- Parameters:

nids – optional list of node identifiers used to filter the tasks.

Return: AbinitTimerParser instance, None if error.

- show_abierrors(nids=None, stream=<_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>)[source]¶

Write to the given stream the list of ABINIT errors for all tasks whose status is S_ABICRITICAL.

- Parameters:

nids – optional list of node identifiers used to filter the tasks.

stream – File-like object. Default: sys.stdout

- show_qouts(nids=None, stream=<_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>)[source]¶

Write to the given stream the content of the queue output file for all tasks whose status is S_QCRITICAL.

- Parameters:

nids – optional list of node identifiers used to filter the tasks.

stream – File-like object. Default: sys.stdout

- debug(status=None, nids=None, stream=<_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>)[source]¶

This method is usually used when the flow didn’t complete successfully. It analyzes the files produced by the tasks to facilitate debugging. Info is printed to stdout.

- Parameters:

status – If not None, only the tasks with this status are selected

nids – optional list of node identifiers used to filter the tasks.

stream – File-like object. Default: sys.stdout

- cancel(nids=None) int[source]¶

Cancel all the tasks that are in the queue. nids is an optional list of node identifiers used to filter the tasks.

- Returns:

Number of jobs cancelled, negative value if error

- get_njobs_in_queue(username=None) int[source]¶

Returns the number of jobs in the queue, None when the number of jobs cannot be determined.

- Parameters:

username – (str) the username of the jobs to count (default is to autodetect)

- build_and_pickle_dump(abivalidate=False)[source]¶

Build dirs and files of the Flow and save the object in pickle format. Returns 0 on success.

- Parameters:

abivalidate – If True, all the input files are validated by calling the abinit parser. If the validation fails, ValueError is raised.

- pickle_dumps(protocol=None)[source]¶

Return a string with the pickle representation. protocol selects the pickle protocol. self.pickle_protocol is used if protocol is None
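For readers unfamiliar with pickle protocols, the standard-library sketch below shows how a negative protocol value selects the highest protocol available, which is how pickle_protocol=-1 is described elsewhere in this reference. The payload is a made-up dictionary, not a Flow.

```python
import pickle

state = {"workdir": "flow_example", "num_tasks": 10}  # hypothetical payload

# A negative protocol selects pickle.HIGHEST_PROTOCOL.
latest = pickle.dumps(state, protocol=-1)
protocol_byte = latest[1]  # byte following the PROTO opcode (protocol >= 2)
restored = pickle.loads(latest)
```

Note that in Python 3 pickle.dumps returns a bytes object.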

- register_task(input: AbinitInput, deps=None, manager=None, task_class=None, append=False) Work[source]¶

Utility function that generates a Work made of a single task

- Parameters:

input – abipy.abio.inputs.AbinitInput

deps – List of Dependency objects specifying the dependency of this node. An empty list of deps implies that this node has no dependencies.

manager – The abipy.flowtk.tasks.TaskManager responsible for the submission of the task. If manager is None, we use the abipy.flowtk.tasks.TaskManager specified during the creation of the work.

task_class – Task subclass to instantiate. Default: abipy.flowtk.tasks.AbinitTask

append – If true, the task is added to the last work (a new Work is created if flow is empty)

- Returns:

The generated abipy.flowtk.works.Work for the task, work[0] is the actual task.

- new_work(deps=None, manager=None, workdir=None) Work[source]¶

Helper function to create a new empty abipy.flowtk.works.Work and add it to the internal list. Client code is responsible for filling the new work.

- Parameters:

deps – List of Dependency objects specifying the dependency of this node. An empty list of deps implies that this node has no dependencies.

manager – The abipy.flowtk.tasks.TaskManager responsible for the submission of the task. If manager is None, we use the TaskManager specified during the creation of the work.

workdir – The name of the directory used for the abipy.flowtk.works.Work.

- Returns:

The registered abipy.flowtk.works.Work.

- register_work(work: Work, deps=None, manager=None, workdir=None) Work[source]¶

Register a new abipy.flowtk.works.Work and add it to the internal list, taking into account possible dependencies.

- Parameters:

work – abipy.flowtk.works.Work object.

deps – List of Dependency objects specifying the dependency of this node. An empty list of deps implies that this node has no dependencies.

manager – The abipy.flowtk.tasks.TaskManager responsible for the submission of the task. If manager is None, we use the TaskManager specified during the creation of the work.

workdir – The name of the directory used for the abipy.flowtk.works.Work.

Returns: The registered abipy.flowtk.works.Work.

- register_work_from_cbk(cbk_name, cbk_data, deps, work_class, manager=None)[source]¶

Registers a callback function that will generate the Task of the Work.

- Parameters:

cbk_name – Name of the callback function (must be a bound method of self)

cbk_data – Additional data passed to the callback function.

deps – List of Dependency objects specifying the dependency of the work.

work_class – abipy.flowtk.works.Work class to instantiate.

manager – The abipy.flowtk.tasks.TaskManager responsible for the submission of the task. If manager is None, we use the TaskManager specified during the creation of the abipy.flowtk.flows.Flow.

- Returns:

The abipy.flowtk.works.Work that will be finalized by the callback.

- allocate(workdir=None, use_smartio: bool = False, build: bool = False) Flow[source]¶

Allocate the Flow i.e. assign the workdir and (optionally) the abipy.flowtk.tasks.TaskManager to the different tasks in the Flow.

- Parameters:

workdir – Working directory of the flow. Must be specified here if we haven’t initialized the workdir in the __init__.

build – True to build the flow and save status to pickle file.

- use_smartio()[source]¶

This function should be called when the entire Flow has been built. It tries to reduce the pressure on the hard disk by using Abinit smart-io capabilities for those files that are not needed by other nodes. Smart-io means that big files (e.g. WFK) are written only if the calculation is unconverged so that we can restart from it. No output is produced if convergence is achieved.

Return: self

- show_dependencies(stream=<_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>) None[source]¶

Writes to the given stream the ASCII representation of the dependency tree.

- on_all_ok()[source]¶

This method is called when all the works in the flow have reached S_OK. This method shall return True if the calculation is completed or False if the execution should continue due to side-effects such as adding a new work to the flow.

This method allows subclasses to implement customized logic such as extending the flow by adding new works. The flow has an internal counter: on_all_ok_num_calls that shall be incremented by client code when subclassing this method. This counter can be used to decide if further actions are needed or not.

An example of flow that adds a new work (only once) when all_ok is reached for the first time:

    def on_all_ok(self):
        if self.on_all_ok_num_calls > 0: return True
        self.on_all_ok_num_calls += 1

        self.register_work(work)
        self.allocate()
        self.build_and_pickle_dump()

        # The scheduler will keep on running the flow.
        return False
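The control flow of this callback can be tried out without ABINIT using a toy model. ToyFlow and run_until_done below are hypothetical stand-ins: they only mimic the counter bookkeeping and the True/False protocol described above, and they do not reproduce the real scheduler.

```python
class ToyFlow:
    """Hypothetical stand-in for a Flow; only the on_all_ok bookkeeping is modelled."""

    def __init__(self, works):
        self.works = list(works)
        self.on_all_ok_num_calls = 0

    def on_all_ok(self):
        # Extend the flow only the first time all works are OK.
        if self.on_all_ok_num_calls > 0:
            return True
        self.on_all_ok_num_calls += 1
        self.works.append("extra_work")
        return False  # ask the caller to keep running


def run_until_done(flow, max_rounds=10):
    """Toy driver: pretend every round ends with all works OK."""
    for rounds in range(1, max_rounds + 1):
        if flow.on_all_ok():
            return rounds
    raise RuntimeError("flow did not finish")


flow = ToyFlow(["scf_work"])
rounds_needed = run_until_done(flow)
```

The first call extends the flow and returns False, so the driver needs a second round before the flow reports completion.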

- set_garbage_collector(exts=None, policy='task') None[source]¶

Enable the garbage collector that will remove the big output files that are not needed.

- Parameters:

exts – string or list with the Abinit file extensions to be removed. A default is provided if exts is None

policy – Either flow or task. If policy is set to ‘task’, we remove the output files as soon as the task reaches S_OK. If ‘flow’, the files are removed only when the flow is finalized. This option should be used when we are dealing with a dynamic flow with callbacks generating other tasks since an abipy.flowtk.tasks.Task might not be aware of its children when it reached S_OK.

- connect_signals() None[source]¶

Connect the signals within the Flow. The Flow is responsible for catching the important signals raised from its works.

- set_spectator_mode(mode=True) None[source]¶

When the flow is in spectator_mode, we have to disable signals, pickle dump and possible callbacks. A spectator can still operate on the flow but the new status of the flow won’t be saved in the pickle file. Usually the flow is in spectator mode when we are already running it via the scheduler or other means and we should not interfere with its evolution. This is the reason why signals and callbacks must be disabled. Unfortunately, preventing client code from calling methods with side-effects when the flow is in spectator mode is not easy (e.g. flow.cancel will cancel the tasks submitted to the queue and the flow used by the scheduler won’t see this change!).

- rapidfire(check_status=True, max_nlaunch=-1, max_loops=1, sleep_time=5, **kwargs)[source]¶

Use PyLauncher to submit tasks in rapidfire mode. kwargs contains the options passed to the launcher.

- Parameters:

check_status

max_nlaunch – Maximum number of launches. default: no limit.

max_loops – Maximum number of loops

sleep_time – seconds to sleep between rapidfire loop iterations

Return: Number of tasks submitted.

- single_shot(check_status=True, **kwargs)[source]¶

Use PyLauncher to submit one task. kwargs contains the options passed to the launcher.

Return: Number of tasks submitted.

- make_scheduler(**kwargs)[source]¶

Build and return a PyFlowScheduler to run the flow.

- Parameters:

kwargs – if empty we use the user configuration file. if filepath in kwargs we init the scheduler from filepath. else pass kwargs to PyFlowScheduler __init__ method.

- make_light_tarfile(name=None)[source]¶

Lightweight tarball file. Mainly used for debugging. Return the name of the tarball file.

- make_tarfile(name=None, max_filesize=None, exclude_exts=None, exclude_dirs=None, verbose=0, **kwargs)[source]¶

Create a tarball file.

- Parameters:

name – Name of the tarball file. Set to os.path.basename(flow.workdir) + “tar.gz” if name is None.

max_filesize (int or string with unit) – a file is included in the tar file if its size <= max_filesize. Can be specified in bytes e.g. max_filesize=1024 or with a string with unit e.g. max_filesize=”1 MB”. No check is done if max_filesize is None.

exclude_exts – List of file extensions to be excluded from the tar file.

exclude_dirs – List of directory basenames to be excluded.

verbose (int) – Verbosity level.

kwargs – keyword arguments passed to the TarFile constructor.

Returns: The name of the tarfile.
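The size and extension filters can be illustrated with the standard tarfile module. This is a sketch under stated assumptions, not abipy's code: make_toy_tarfile is a hypothetical helper, max_filesize is taken in bytes only, and the default archive name is assumed to end in ".tar.gz".

```python
import os
import tarfile
import tempfile


def make_toy_tarfile(workdir, name=None, max_filesize=None, exclude_exts=()):
    """Hypothetical helper: archive `workdir`, skipping large or excluded files."""
    if name is None:
        name = os.path.basename(workdir) + ".tar.gz"  # assumed default name
    tar_path = os.path.join(os.path.dirname(workdir), name)

    def keep(tarinfo):
        # Returning None from a tarfile filter excludes the member.
        if tarinfo.isfile():
            if any(tarinfo.name.endswith(ext) for ext in exclude_exts):
                return None
            if max_filesize is not None and tarinfo.size > max_filesize:
                return None
        return tarinfo

    with tarfile.open(tar_path, "w:gz") as tar:
        tar.add(workdir, arcname=os.path.basename(workdir), filter=keep)
    return tar_path


with tempfile.TemporaryDirectory() as tmp:
    workdir = os.path.join(tmp, "flow_example")
    os.makedirs(workdir)
    for fname, size in [("run.abo", 10), ("out_WFK", 5000), ("job.sh", 20)]:
        with open(os.path.join(workdir, fname), "wb") as fh:
            fh.write(b"x" * size)
    tar_path = make_toy_tarfile(workdir, max_filesize=1024, exclude_exts=[".sh"])
    with tarfile.open(tar_path) as tar:
        members = sorted(tar.getnames())
```

In this toy run the large out_WFK file and the excluded job.sh script are left out of the archive, while the directory entry and run.abo are kept.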

- explain(what='all', nids=None, verbose=0) str[source]¶

Return string with the docstrings of the works/tasks in the Flow grouped by class.

- Parameters:

what – “all” to print all nodes, “works” for Works only, “tasks” for tasks only.

nids – list of node identifiers used to filter works or tasks.

verbose – Verbosity level

- show_autoparal(nids=None, verbose=0) None[source]¶

Print to terminal the autoparal configurations for each task in the Flow.

- Parameters:

nids – list of node identifiers used to filter works or tasks.

verbose – Verbosity level

- get_graphviz(engine='automatic', graph_attr=None, node_attr=None, edge_attr=None)[source]¶

Generate flow graph in the DOT language.

- Parameters:

engine – Layout command used. [‘dot’, ‘neato’, ‘twopi’, ‘circo’, ‘fdp’, ‘sfdp’, ‘patchwork’, ‘osage’]

graph_attr – Mapping of (attribute, value) pairs for the graph.

node_attr – Mapping of (attribute, value) pairs set for all nodes.

edge_attr – Mapping of (attribute, value) pairs set for all edges.

Returns: graphviz.Digraph <https://graphviz.readthedocs.io/en/stable/api.html#digraph>
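To give an idea of what a flow graph in the DOT language looks like, the sketch below writes DOT source by hand from a dependency mapping. The mapping, the to_dot helper and the parent-to-child edge direction are assumptions for illustration. The real method returns a graphviz.Digraph object rather than a string.

```python
# Hypothetical dependency mapping: child node -> list of parent nodes.
deps = {"nscf": ["scf"], "scr": ["nscf"], "sigma": ["nscf", "scr"]}


def to_dot(deps, graph_name="flow"):
    """Return DOT source with one edge per (parent -> child) dependency."""
    lines = [f"digraph {graph_name} {{"]
    for child in sorted(deps):
        for parent in deps[child]:
            lines.append(f'    "{parent}" -> "{child}";')
    lines.append("}")
    return "\n".join(lines)


dot_source = to_dot(deps)
```

The resulting text can be rendered with any of the Graphviz layout commands listed for the engine parameter.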

- graphviz_imshow(ax=None, figsize=None, dpi=300, fmt='png', **kwargs) Any[source]¶

Generate flow graph in the DOT language and plot it with matplotlib.

- Parameters:

ax – matplotlib.axes.Axes or None if a new figure should be created.

figsize – matplotlib figure size (None to use default)

dpi – DPI value.

fmt – Select format for output image

Return: matplotlib.figure.Figure

Keyword arguments controlling the display of the figure:

| kwargs | Meaning |
|---|---|
| title | Title of the plot (Default: None). |
| show | True to show the figure (default: True). |
| savefig | “abc.png” or “abc.eps” to save the figure to a file. |
| size_kwargs | Dictionary with options passed to fig.set_size_inches e.g. size_kwargs=dict(w=3, h=4) |
| tight_layout | True to call fig.tight_layout (default: False) |
| ax_grid | True (False) to add (remove) grid from all axes in fig. Default: None i.e. fig is left unchanged. |
| ax_annotate | Add labels to subplots e.g. (a), (b). Default: False |
| fig_close | Close figure. Default: False. |
| plotly | Try to convert mpl figure to plotly. |

- plot_networkx(mode='network', with_edge_labels=False, ax=None, arrows=False, node_size='num_cores', node_label='name_class', layout_type='spring', **kwargs) Any[source]¶

Use networkx to draw the flow with the connections among the nodes and the status of the tasks.

- Parameters:

mode – network to show connections, status to group tasks by status.

with_edge_labels – True to draw edge labels.

ax – matplotlib.axes.Axes or None if a new figure should be created.

arrows – if True draw arrowheads.

node_size – By default, the size of the node is proportional to the number of cores used.

node_label – By default, the task class is used to label node.

layout_type – Get positions for all nodes using layout_type. e.g. pos = nx.spring_layout(g)

Warning

Requires networkx package.

Keyword arguments controlling the display of the figure:

| kwargs | Meaning |
|---|---|
| title | Title of the plot (Default: None). |
| show | True to show the figure (default: True). |
| savefig | “abc.png” or “abc.eps” to save the figure to a file. |
| size_kwargs | Dictionary with options passed to fig.set_size_inches e.g. size_kwargs=dict(w=3, h=4) |
| tight_layout | True to call fig.tight_layout (default: False) |
| ax_grid | True (False) to add (remove) grid from all axes in fig. Default: None i.e. fig is left unchanged. |
| ax_annotate | Add labels to subplots e.g. (a), (b). Default: False |
| fig_close | Close figure. Default: False. |
| plotly | Try to convert mpl figure to plotly. |

- class abipy.flowtk.flows.G0W0WithQptdmFlow(workdir, scf_input, nscf_input, scr_input, sigma_inputs, manager=None)[source]¶

Bases:

Flow

- abipy.flowtk.flows.bandstructure_flow(workdir: str, scf_input: AbinitInput, nscf_input: AbinitInput, dos_inputs=None, manager=None, flow_class=<class 'abipy.flowtk.flows.Flow'>, allocate=True)[source]¶

Build an abipy.flowtk.flows.Flow for band structure calculations.

- Parameters:

workdir – Working directory.

scf_input – Input for the GS SCF run.

nscf_input – Input for the NSCF run (band structure run).

dos_inputs – Input(s) for the NSCF run (dos run).

manager – abipy.flowtk.tasks.TaskManager object used to submit the jobs. Initialized from manager.yml if manager is None.

flow_class – Flow subclass

allocate – True if the flow should be allocated before returning.

Returns: abipy.flowtk.flows.Flow object

- abipy.flowtk.flows.g0w0_flow(workdir: str, scf_input: AbinitInput, nscf_input: AbinitInput, scr_input: AbinitInput, sigma_inputs, manager=None, flow_class=<class 'abipy.flowtk.flows.Flow'>, allocate=True)[source]¶

Build an abipy.flowtk.flows.Flow for one-shot $G_0W_0$ calculations.

- Parameters:

workdir – Working directory.

scf_input – Input for the GS SCF run.

nscf_input – Input for the NSCF run (band structure run).

scr_input – Input for the SCR run.

sigma_inputs – List of inputs for the SIGMA run.

flow_class – Flow class

manager – abipy.flowtk.tasks.TaskManager object used to submit the jobs. Initialized from manager.yml if manager is None.

allocate – True if the flow should be allocated before returning.

Returns:

abipy.flowtk.flows.Flow object

gw_works Module¶

Works and Flows for GW calculations with the quartic-scaling implementation.

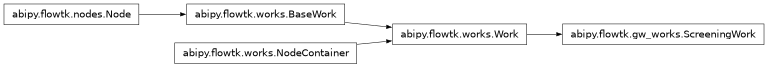

- class abipy.flowtk.gw_works.ScreeningWork(workdir=None, manager=None)[source]¶

Bases:

Work

This work parallelizes the calculation of the q-points of the screening. It also provides the callback on_all_ok that calls the mrgscr tool to merge all the partial SCR files produced.

The final SCR file is available in the outdata directory of the work. To use this SCR file as a dependency of other tasks, use the syntax:

deps={scr_work: "SCR"}

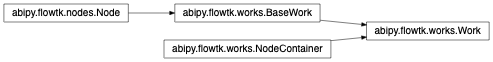

Inheritance Diagram

- classmethod from_nscf_task(nscf_task: NscfTask, scr_input, manager=None) ScreeningWork[source]¶

Construct a ScreeningWork from an abipy.flowtk.tasks.NscfTask object.

- Parameters:

nscf_task – abipy.flowtk.tasks.NscfTask object which produced the WFK file with empty states.

scr_input – abipy.abio.inputs.AbinitInput object representing a SCREENING calculation.

manager – abipy.flowtk.tasks.TaskManager object.

- classmethod from_wfk_filepath(wfk_filepath, scr_input, manager=None) ScreeningWork[source]¶

Construct a ScreeningWork from a WFK filepath and a screening input.

WARNING: the parameters reported in scr_input, e.g. k-mesh, nband, ecut, must be consistent with those used to generate the WFK file. No consistency check is done at this level. You have been warned!

- Parameters:

wfk_filepath – Path to the WFK file.

scr_input – abipy.abio.inputs.AbinitInput object representing a SCREENING calculation.

manager – abipy.flowtk.tasks.TaskManager object.

- merge_scr_files(remove_scrfiles=True, verbose=0) str[source]¶

This method is called when all the q-points have been computed. It runs mrgscr sequentially on the local machine to produce the final SCR file in the outdir of the Work. If remove_scrfiles is True, the partial SCR files are removed after the merge.

gwr_works Module¶

Works and Flows for GWR calculations (GW with supercells).

NB: An Abinit build with Scalapack is required to run GWR.

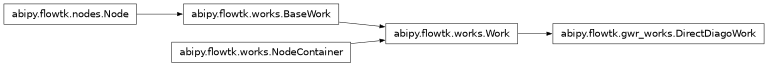

- class abipy.flowtk.gwr_works.DirectDiagoWork(workdir=None, manager=None)[source]¶

Bases:

Work

This work performs the direct diagonalization of the KS Hamiltonian using the density produced by a GS-SCF run and produces a WFK file with empty states in the outdir of the second task.

Inheritance Diagram

- classmethod from_scf_input(scf_input: AbinitInput, green_nband: int, manager: TaskManager = None) DirectDiagoWork[source]¶

Build object from an input representing a GS-SCF calculation.

- Parameters:

scf_input – Input for the GS-SCF calculation.

green_nband – Number of bands to compute in the direct diagonalization. A negative value activates full diagonalization with nband equal to the number of PWs.

- class abipy.flowtk.gwr_works.GWRSigmaConvWork(workdir=None, manager=None)[source]¶

Bases:

_BaseGWRWork

This work performs multiple QP calculations with the GWR code and produces xlsx files in its outdata directory with the QP results obtained with the different parameters.

Inheritance Diagram

- class abipy.flowtk.gwr_works.GWRChiCompareWork(workdir=None, manager=None)[source]¶

Bases:

_BaseGWRWork

This work computes the irreducible polarizability along the imaginary axis with both the GWR code and the quartic-scaling algorithm, using the same minimax mesh so that the two results can be compared.

Inheritance Diagram

- classmethod from_scf_input(scf_input: AbinitInput, gwr_ntau, nband, ecuteps, den_node: Node, wfk_node: Node, gwr_kwargs=None, scr_kwargs=None, manager: TaskManager = None)[source]¶

Build the Work from an input for a GS-SCF calculation.

- Parameters:

scf_input – input for GS run.

gwr_ntau – Number of points in minimax mesh.

nband – Number of bands to build G and chi.

ecuteps – Cutoff energy for chi.

den_node – The Node that produced the DEN file.

wfk_node – The Node that produced the WFK file.

gwr_kwargs – Extra kwargs used to build the GWR input.

scr_kwargs – Extra kwargs used to build the SCR input.

manager – Abipy Task Manager.

- class abipy.flowtk.gwr_works.GWRRPAConvWork(workdir=None, manager=None)[source]¶

Bases:

_BaseGWRWork

This work computes the RPA correlation energy for different numbers of points in the minimax mesh.

- classmethod from_scf_input_ntaus(scf_input: AbinitInput, gwr_ntau_list, nband, ecuteps, den_node: Node, wfk_node: Node, gwr_kwargs=None, manager: TaskManager = None)[source]¶

Build the Work from an input for a GS-SCF calculation.

- Parameters:

scf_input – input for GS run.

gwr_ntau_list – List with number of points in minimax mesh.

nband – Number of bands to build G and chi.

ecuteps – Cutoff energy for chi.

den_node – The Node that produced the DEN file.

wfk_node – The Node that produced the WFK file.

gwr_kwargs – Extra kwargs used to build the GWR input.

manager – Abipy Task Manager.

gruneisen Module¶

Work for computing the Grüneisen parameters with finite differences of DFPT phonons.

WARNING: This code must be tested more carefully.

- class abipy.flowtk.gruneisen.GruneisenWork(workdir=None, manager=None)[source]¶

Bases:

Work

This work computes the Grüneisen parameters (derivative of the phonon frequencies with respect to volume) using finite differences and the phonons obtained with the DFPT part of Abinit. The Anaddb input file needed to compute the Grüneisen parameters is generated in the outdata directory of the flow.

Three DFPT phonon calculations are required: one at the equilibrium volume and two at slightly larger and slightly smaller volumes. The unit cells at these volumes must be fully relaxed under the constraint of fixed volume. This Work automates the entire procedure starting from an input for GS calculations.

- classmethod from_gs_input(gs_inp, voldelta, ngqpt, tolerance=None, with_becs=False, ddk_tolerance=None, workdir=None, manager=None) GruneisenWork[source]¶

Build the work from an abipy.abio.inputs.AbinitInput representing a GS calculation.

- Parameters:

gs_inp – abipy.abio.inputs.AbinitInput representing a GS calculation in the initial unit cell.

voldelta – Absolute increment for unit cell volume. The three volumes are: [v0 - voldelta, v0, v0 + voldelta] where v0 is taken from gs_inp.structure.

ngqpt – three integers defining the q-mesh for phonon calculations.

tolerance – dict {“varname”: value} with the tolerance to be used in the phonon run. None to use AbiPy default.

with_becs – Activate calculation of Electric field and Born effective charges.

ddk_tolerance – dict {“varname”: value} with the tolerance used in the DDK run if with_becs. None to use AbiPy default.
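The finite-difference idea behind this Work can be illustrated with a small stand-alone sketch. It does not use AbiPy or Anaddb: the helper names `volumes_for_gruneisen`, `mode_gruneisen` and `toy_frequency` are hypothetical, and the formula is the textbook mode Grüneisen parameter, gamma = -(V / w) * dw/dV, estimated with a central difference over the three volumes listed above.

```python
from typing import List


def volumes_for_gruneisen(v0: float, voldelta: float) -> List[float]:
    """Return the three volumes [v0 - voldelta, v0, v0 + voldelta]."""
    if not 0 < voldelta < v0:
        raise ValueError("voldelta must satisfy 0 < voldelta < v0")
    return [v0 - voldelta, v0, v0 + voldelta]


def mode_gruneisen(w_minus: float, w0: float, w_plus: float,
                   v0: float, voldelta: float) -> float:
    """Central finite-difference estimate of gamma = -(V / w) * dw/dV at v0."""
    dw_dv = (w_plus - w_minus) / (2.0 * voldelta)
    return -(v0 / w0) * dw_dv


def toy_frequency(volume: float) -> float:
    """Toy model (assumption): the frequency decreases linearly with volume."""
    return 10.0 - 0.05 * volume


v_minus, v0, v_plus = volumes_for_gruneisen(v0=100.0, voldelta=1.0)
gamma = mode_gruneisen(
    toy_frequency(v_minus), toy_frequency(v0), toy_frequency(v_plus),
    v0=v0, voldelta=1.0,
)
print(f"gamma = {gamma:.3f}")
```

In a real calculation the three frequencies come from the DFPT phonon runs at the relaxed cells, and the derivative is evaluated by Anaddb rather than by hand.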

gs_works Module¶

Work subclasses related to GS calculations.

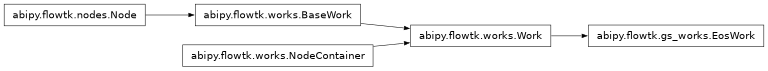

- class abipy.flowtk.gs_works.EosWork(workdir=None, manager=None)[source]¶

Bases:

Work

Work to compute the Equation of State. The EOS is obtained by computing E(V) for several volumes around the input V0. The initial volumes are obtained by rescaling the input lattice vectors so that length proportions and angles are preserved. This guess is exact for cubic materials, while other Bravais lattices require a constant-volume optimization of the cell geometry.

If lattice_type=="cubic" and the atomic positions are fixed by symmetry, you can use move_atoms=False to perform standard GS-SCF calculations. In all other cases, E(V) is obtained by relaxing the atomic positions at fixed volume.

The E(V) points are fitted at the end of the work and the results are saved in the eos_data.json file produced in the outdata directory. The file contains the energies, the volumes and the values of V0, B0, B1 obtained with different EOS models.
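The rescaling of the lattice vectors described above can be sketched in plain Python. This is only an illustration under the assumption of a uniform scale factor (V / V0)^(1/3); the function names and the tetragonal cell are hypothetical and AbiPy's own structure objects are not used.

```python
from typing import List

Matrix = List[List[float]]


def cell_volume(lattice: Matrix) -> float:
    """Volume of the cell as |a . (b x c)| for row vectors a, b, c."""
    a, b, c = lattice
    cross = [
        b[1] * c[2] - b[2] * c[1],
        b[2] * c[0] - b[0] * c[2],
        b[0] * c[1] - b[1] * c[0],
    ]
    return abs(sum(ai * xi for ai, xi in zip(a, cross)))


def rescale_to_volume(lattice: Matrix, new_volume: float) -> Matrix:
    """Scale all lattice vectors by the same factor so the cell has new_volume."""
    factor = (new_volume / cell_volume(lattice)) ** (1.0 / 3.0)
    return [[factor * x for x in vec] for vec in lattice]


# Hypothetical tetragonal cell with a = b = 4 and c = 6 (arbitrary units).
lattice = [[4.0, 0.0, 0.0], [0.0, 4.0, 0.0], [0.0, 0.0, 6.0]]
scaled = rescale_to_volume(lattice, new_volume=1.01 * cell_volume(lattice))

print(cell_volume(scaled) / cell_volume(lattice))  # volume ratio, close to 1.01
print(scaled[2][2] / scaled[0][0])                 # c/a ratio is unchanged
```

Because every vector is multiplied by the same factor, the c/a ratio and the angles are untouched. For a non-cubic cell the optimal c/a generally depends on the volume, which is why a constant-volume relaxation is still needed.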

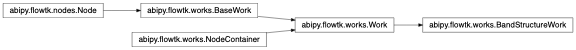

Inheritance Diagram

- classmethod from_scf_input(scf_input: AbinitInput, npoints=4, deltap_vol=0.25, ecutsm=0.5, move_atoms=True, manager=None) EosWork[source]¶

Build an EosWork from an AbinitInput for GS-SCF.

- Parameters:

scf_input – AbinitInput for GS-SCF used as template to generate the other inputs.

npoints – Number of volumes generated on the right (left) of the equilibrium volume. The total number of points is therefore 2 * npoints + 1.

deltap_vol – Step of the linear mesh given as a relative percentage of the equilibrium volume. The step is thus: v0 * deltap_vol / 100.

ecutsm – Value of the ecutsm input variable. This value is used only if scf_input does not provide ecutsm; otherwise the value in scf_input is kept.

move_atoms – If True, a structural relaxation of ions is performed for each volume. This is needed if the atomic positions are not fixed by symmetry.

manager – TaskManager instance. Use default if None.
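The volume mesh implied by npoints and deltap_vol can be written out as a short sketch. It follows only the parameter descriptions above (a linear mesh of 2 * npoints + 1 volumes with step v0 * deltap_vol / 100); the function `eos_volumes` is hypothetical and AbiPy may build the mesh differently internally.

```python
from typing import List


def eos_volumes(v0: float, npoints: int = 4, deltap_vol: float = 0.25) -> List[float]:
    """Linear mesh of 2 * npoints + 1 volumes centered on v0."""
    step = v0 * deltap_vol / 100.0
    return [v0 + i * step for i in range(-npoints, npoints + 1)]


# Hypothetical equilibrium volume of 40 (arbitrary units) with the default settings.
volumes = eos_volumes(v0=40.0)
print(len(volumes))
print(volumes[0], volumes[-1])
```

With the defaults, the mesh spans plus or minus 1% of the equilibrium volume (4 steps of 0.25% on each side).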

- classmethod from_inputs(inputs: list[AbinitInput], manager=None) EosWork[source]¶

Advanced interface to build an EosWork from a list of AbinitInputs.

launcher Module¶

Tools for the submission of Tasks.

- class abipy.flowtk.launcher.ScriptEditor[source]¶

Bases:

object

Simple editor to simplify the writing of shell scripts.

- class abipy.flowtk.launcher.PyLauncher(flow: Flow, **kwargs)[source]¶

Bases:

object

This object handles the submission of the tasks contained in an abipy.flowtk.flows.Flow.

- Error¶

alias of

PyLauncherError

- single_shot()[source]¶

Run the first Task that is ready for execution.

- Returns:

Number of jobs launched.

- rapidfire(max_nlaunch=-1, max_loops=1, sleep_time=5)[source]¶

Keeps submitting Tasks until we are out of jobs or no job is ready to run.

- Parameters:

max_nlaunch – Maximum number of launches. default: no limit.

max_loops – Maximum number of loops

sleep_time – seconds to sleep between rapidfire loop iterations

- Returns:

The number of tasks launched.
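The meaning of max_nlaunch, max_loops and sleep_time can be illustrated with a toy model. The sketch below is not AbiPy's implementation: `ToyTask` and `toy_rapidfire` are hypothetical names, and the toy tasks complete as soon as they are started, whereas real tasks run asynchronously.

```python
import time


class ToyTask:
    """Hypothetical stand-in for a task; it is ready once its dependencies are done."""

    def __init__(self, name, deps=()):
        self.name, self.deps, self.done = name, tuple(deps), False

    @property
    def ready(self):
        return not self.done and all(dep.done for dep in self.deps)

    def start(self):
        # A real task would be submitted to a queue; here it finishes at once.
        self.done = True
        return True


def toy_rapidfire(tasks, max_nlaunch=-1, max_loops=1, sleep_time=0.0):
    """Launch ready tasks in up to max_loops passes; return the number launched."""
    num_launched = 0
    for loop in range(max_loops):
        if loop > 0:
            time.sleep(sleep_time)
        ready = [task for task in tasks if task.ready]
        if not ready:
            break  # no job is ready to run
        for task in ready:
            if task.start():
                num_launched += 1
            if 0 < max_nlaunch <= num_launched:
                return num_launched  # launch limit reached
    return num_launched


scf = ToyTask("scf")
nscf = ToyTask("nscf", deps=[scf])
print(toy_rapidfire([scf, nscf], max_loops=2))
```

A non-positive max_nlaunch means no limit, matching the default of -1 documented above.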

- class abipy.flowtk.launcher.PyFlowScheduler(**kwargs)[source]¶

Bases:

BaseScheduler

- property pid_file: str¶

Absolute path of the file with the pid. The file is located in the workdir of the flow.

lumi_works Module¶

Work subclasses for the computation of luminescent properties.

- class abipy.flowtk.lumi_works.LumiWork(workdir=None, manager=None)[source]¶

Bases:

Work

This Work implements Fig 1 of https://arxiv.org/abs/2010.00423.

Client code is responsible for the preparation of the supercell and of the GS SCF input files with the fixed electronic occupations associated to the two configurations. By default, the work computes the two relaxed structures and the four total energies corresponding to the Ag, Ag*, Ae*, Ae configurations. Optionally, one can activate the computation of four electronic band structures. See docstring of from_scf_inputs for further info.
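As a rough illustration of how the four total energies are typically combined, the sketch below evaluates the usual quantities of a one-dimensional configuration-coordinate picture. It assumes the common convention in which the subscript labels the geometry (g: relaxed ground state, e: relaxed excited state) and the star marks the excited electronic configuration. The function `lumi_energies`, its dictionary keys and the numerical values are hypothetical and are not part of AbiPy.

```python
from typing import Dict


def lumi_energies(e_ag: float, e_ag_star: float,
                  e_ae_star: float, e_ae: float) -> Dict[str, float]:
    """Combine the four total energies (same units) into transition energies."""
    absorption = e_ag_star - e_ag  # vertical excitation at the ground-state geometry
    emission = e_ae_star - e_ae    # vertical de-excitation at the excited-state geometry
    return {
        "absorption": absorption,
        "emission": emission,
        "zero_phonon_line": e_ae_star - e_ag,  # zero-point corrections neglected
        "fc_shift_excited": e_ag_star - e_ae_star,
        "fc_shift_ground": e_ae - e_ag,
        "stokes_shift": absorption - emission,
    }


# Made-up total energies in eV, relative to the Ag configuration.
result = lumi_energies(e_ag=0.0, e_ag_star=3.0, e_ae_star=2.75, e_ae=0.25)
for name, value in result.items():
    print(f"{name}: {value:.2f} eV")
```

In this picture the Stokes shift is the sum of the two Franck-Condon shifts, which gives a quick consistency check on the four computed energies.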